Having tossed around the idea that men looked more fit overall during the 1970s and '80s from playing racquet sports, let's take a look at how popular and culturally influential they have been over the decades.

There is a clear long-term cycle of ebbing and flowing popularity, a historical pattern that we ought to find more fascinating than one damned thing after another, a one-way rise, or a one-way fall. Cycles provide so much variation for us to account for, while limiting our harebrained guesses as to what's causing them, in a way that studying one-way trends cannot. (With a one-way trend, you can point to any of a zillion other variables that also moved in one direction during that time, and you're generally left with subjective arguments about what is "more plausible" to whittle them down to your preferred story.)

For this post I'll restrict the focus to how popular racquet sports have been in American culture, although in a future post I might try digging through some data on participation rates across time. First I'll present the basic picture of their popularity, and then throw out some guesses as to why they've cycled in the way they have.

Starting with the present, the most recent craze for racquet sports that I remember vividly was racquetball. You don't hear or see much about it anymore, and it's not as though it's been replaced by a brand new racquet sport. Below is a graph showing how common the word "racquetball" has been in Google's vast library of digitized books and periodicals. (From the Ngram website.)

It shows tremendous growth during the '70s, a plateau from the later '80s through the early '90s, and a steady decline since then. So, at least judging by the impression it's left in print media, racquetball was mostly a phenomenon of the '70s and '80s. Most people who have memories from that time will find this unobjectionable, but it's worth trying to document its rise and fall quantitatively.

And it wasn't just writers of books and articles who took notice of the racquetball craze. Iconic scenes can be found in the hit movies Manhattan and Splash (with John Candy smoking during the game, wondering why he can't get into shape), and children could play the sport in video game form on the Atari 2600.

Speaking of iconic '80s movies with Tom Hanks, what about that scene in Big where a fight erupts over a game of paddleball?

I didn't even know what that game was called before doing research for this post. It's played mostly in the New York metro area, and so adds to the movie's sense of regional authenticity, also enhanced by shots of a summertime carnival at a local boardwalk, the toy mecca FAO Schwarz, and a crazy black bum shouting "I'll kill the bitch!" to himself while marching down a Manhattan street.

It looks like paddleball was most popular during the '70s and the early '80s, more or less when racquetball was.

The sport most similar to racquetball, though, is squash -- a name I've heard for a while, but a game I've never actually seen in real life. It's no wonder, as its heyday is long past. In searching Google's library, I couldn't use just "squash," which has too many meanings, so to narrow it down I searched for "squash rackets". (A graph for "squash racquets" shows basically the same picture.)

Its popularity got going around the turn of the century and peaked in the mid-'30s, and although it has never made a comeback since, its brother-sport did during the '70s and '80s. So, squash was the racquetball of the Jazz Age, yet another point of cultural kinship between the Roaring Twenties and the Go-Go Eighties. The New York Metropolitan Squash Racquets Association was founded in 1924.

However, no sport symbolizes Jazz Age glamour and dynamism more than tennis. The two players below, Rene Lacoste and Suzanne Lenglen, were not only major sports celebrities of their day, but also fashion trend-setters. Lacoste developed the modern polo shirt, and his tenacity on the court earned him the nickname "the Crocodile," an image that still remains in logo form on polo shirts across the world. And Lenglen was part of the broader movement for women to wear less restrictive clothing and to show more skin. She played with bare shoulders and a skirt that revealed the leg above the knee, something unthinkable even a few decades earlier.

Vogue magazine put together a brief review of the influence of tennis on modern clothing styles, one of a series on what they call "turning points" in recent fashion history. Women's growing participation in sports seems to have had the biggest effect, since the functional demands of the sport allow them to wear less restrictive clothing than would be acceptable in an everyday setting. But once they get used to greater movement, grace, and style on the court -- why not take it off the court too?

This introduction-via-sports makes the shift in everyday styles less abrupt, less confrontational, and less self-conscious. The gradual, "we didn't intend it, but why not?" nature of the transition allows the mainstream to accept it -- not just the men, who generally don't mind seeing a little more skin, but especially the older female guardians of modesty. Indeed, it's the old nuns at the Catholic school, or their matronly counterparts on the streets of Tehran, who most zealously enforce dress codes, to give only two examples of a universal pattern.

As for its popularity in the print culture, tennis shows a clear cycling pattern:

It rises through the mid-late-'30s, falls through the early '60s, rises again to a plateau around the late '80s and early '90s, and has fallen again since. Perhaps not surprisingly, more or less the same pattern shows up for "Ping Pong" as well:

Ping Pong also rises through the mid-late-'30s, falls through the late '50s, picks up again during the '60s to a peak in the mid-'70s, and despite a slump through the earlier '80s, bounces back for one last hurrah through the early-mid-'90s, declining since, though not so dramatically. (You wonder how many of the hits from the last decade are actually part of a piece about beer pong, the boring drinking game.)

The weakest example of the cycle is badminton, perhaps because it's never been very popular in the English-speaking world, and seems to thrive more in East and South Asia. It's also the least explosive of the four major racquet sports. Nevertheless, the pattern isn't so unfamiliar: a rise from the turn of the century through the early '40s, a decline through the early '70s, another rise through the early '80s, and another decline since.

Taking all of these various racquet sports into account, and giving greater weight to the more common ones, the overall smoothed-out pattern seems to go like this: a rise from the turn of the century through the mid-'30s, a decline through the early '60s, another rise through the mid-'90s, and another decline since.

Is there a single variable that shows roughly the same up-and-down timing as the popularity of racquet sports? Yes, and as usual around here, it is the crime rate. The cycle for racquet sports seems to lag a few years behind the cycle in the crime rate. That could be due to a delay in reporting on a trend -- writers still focus on it a few years after it's begun to decline, since they can't yet tell that the decline is a long-term one -- or to a continued interest even a few years into a falling-crime period. Since we're taking a rough look anyway, I'll treat these cycles as happening more or less at the same time.

Why do racquet sports become more popular in rising-crime times, and less in falling-crime times? Well, they are probably part of an even broader pattern of greater interest in sports and fitness during rising-crime times. The turn of the 20th century through the early '30s was witness not only to a craze for tennis, squash, and Ping Pong, but also for baseball, football, golf, sprinting, swimming, and boxing.

Ditto for the '60s through the early '90s -- baseball and especially football shot through the roof, boxing and golf both woke up from their mid-century slumber, aerobics introduced intensive movement to women, everyone piled into the public pool during the summer, and Chariots of Fire revived the Jazz Age love of sprinting.

During the mid-century, and over the last 20 years, Americans seem less interested in sports. You generally don't see tennis courts, tennis players, or tennis instructors in an episode of I Love Lucy or The Three Stooges, and these days people are aware of who Tiger Woods and the Williams sisters are, but really couldn't care less. And where is the Mike Tyson phenomenon in mixed martial arts? The few activities that have gained a mainstream following are individualized and non-explosive -- yoga, jogging, "working out" at the gym (although weight-lifting may get intense).

Falling-crime times are more low-key, slow-paced, and even apathetic, so the energy and intensity of sports begin losing their appeal. The doping scandals of the past 20 years have added an extra layer of cynicism that was absent during the mid-century lack of interest, but the fundamental relationship seems to be falling crime leading to greater apathy about sports.

Unlike falling-crime times, when the world keeps getting safer, rising-crime times require you to be in a more excitable state, physiologically and psychologically. This is not only to be more aware of possible threats to yourself, but more importantly to participate in a wider vigilance, as neighbors look out for neighbors and the great majority band together for mutual support against an increasing chance of being preyed on by criminals.

So, people's minds and bodies are prepared to welcome and even seek out intensive, social sports. They are not part of some narcissistic plan to "look good naked," but the pursuit of overall fitness. Let's face it -- most of us who aren't professional athletes must feel some kind of greater challenge from the environment to get us up and active. And like it or not, one of the best motivators to get into shape is the sense that you may be put physically to the test out there in a potentially violent situation, whether a threat to yourself or standing up for someone else.

We may find the violence itself repellent, but we shouldn't overlook how our responses to the challenge of rising crime ultimately strengthen our minds and bodies, and even our social and cultural bonds. During the '20s and the '80s, it's not as though the murder rate had regressed to pre-modern levels. It was more like the process of bone- and muscle-building, where just enough environmental stress is needed to get them into optimal shape, neither overwhelmed and broken nor padded and atrophied.

January 30, 2013

January 29, 2013

The unwholesome mid-century: Clear heels and butt-padded briefs

I just got through with a book on the history of the bra in America, but it'll take a while to find enough pictures and select quotes to put together a whole post on bras and breasts. In the meantime, I've noticed two other weird contemporary things that turn out to be throwbacks to the mid-century.

First, take a look at Marilyn Monroe's preferred footwear. Lucite heels were big in the 1950s, and not just with her. Do a Google image search for Marilyn Monroe heels to see many examples, including these two:

When did clear heels become the new whore uniform? When did that happen? Was there a big ho convention and all the hos got together and said, "We need something new. Something that just says nasty" … And one girl said, "I got it! Clear heels!" "Uh, girl you’re disgusting!" [Chris Rock]

Don't ask me what specific features about clear heels make them unwholesome, but strippers couldn't have chosen them for no reason. The mid-century was also big on the burlesque, striptease, girlie show, pin-up, look-but-don't-touch kind of female attitude that we suffer from now. Similar mindsets will choose similar fashion accessories.

In 60 years, do you think it'll be common knowledge, even among those who study the pop culture of our time, that in the early 21st century, clear heels = stripper shoes? Most will probably look at non-strippers wearing them and have no strong reaction of "Why did they wear such sleazy shoes back then?"

That's the temptation when we see Marilyn Monroe wearing them -- hey, it was the '50s, they couldn't have had any unwholesome quality to them. But we don't know that. Maybe Jack Benny did a bit about what's the deal with this craze for slutty Lucite heels. How would we ever know about that now? If we're inclined to judge them unwholesome now, the same judgment should apply to the mid-century, since we don't know anything more about them.

Second, here's a 1950s ad from Frederick's of Hollywood hawking a pair of briefs with some serious junk-in-the-trunk padding:

They were the major lingerie retailer before Victoria's Secret, so this thing has to be fairly representative of what customers wanted back then. In the 21st century, these things have become profitable again, at least judging by their being advertised in the SkyMall catalog on Southwest Airlines.

Here is an earlier post about the cycle in ideal female body type, which tracks the cycle in the crime rate. Rising-crime times select for girls with less T&A, and falling-crime times for more voluptuous girls.

Today we attribute the craze for plump rumps to something having to do with race -- Sir Mix-a-Lot, J. Lo, Beyonce, Shakira, etc. But back in the '80s black girls didn't have jungle booties, so it's not a race thing. Looking back at the mid-century, it definitely was not a race thing. It was Marilyn Monroe, Lana Turner, et al., who women wanted to look like -- shapely white girls. And if women were willing to pad their bra with "falsies" in order to keep up with the ideal (more on that in another post), then why not wear padded briefs too?

I certainly wouldn't mind the sight of women wearing those things, but only if I didn't know what was really underneath. Seeing butt-padded briefs, stuffed bras of one kind or another, shaved pubic hair, etc. -- it all signals a heightened self-consciousness on the girl's part. Like she's so nervous she won't measure up that she has to resort to elaborate tricks to distract and fool the viewer. You can't give off an unwholesome vibe if you lack self-awareness (one of the great things about ditzy girls is how wholesome they appear).

Ultimately any guy who she goes all the way with will see her natural body, so why be misleading in the meantime? These things aren't like make-up: they're making a substantial alteration of her body type. Clearly the bootylicious briefs are designed to deceive or manipulate the perception of men who won't actually get to see the real thing. They're for self-consciously drawing male attention to your tits or ass so that you can string them along and have them do your bidding, while they drool stupidly.

There really does seem to be a cynical, almost Machiavellian approach among women in the mid-century as far as displaying themselves to men was concerned. Like, "Well you know men -- always thinking with their dicks, so if you need to recruit them in reaching some goal of yours, just stuff your bra, pad your seat, and put on a caricatured pin-up smile. Hey, a gal's gotta do what a gal's gotta do."

You can pick this up, less severely, in the lyrics to the early '50s Doris Day song "A Guy Is a Guy". There's a throwaway reference to marriage at the end, but for the most part it sounds cynical, self-aware, even sleazy (without, however, being obscene the way it would be today). During the romantic '80s, you didn't hear that kind of attitude. (And no, that "I Know What Boys Like" song doesn't count, since it wasn't a #4 hit on the charts from a major singer, but a dud from one of the few unlikable new wave groups on the fringe.)

Anyway, more to say about these topics when I get around to writing up the stuff about bras.

Categories:

Design,

Dudes and dudettes,

Morality,

Pop culture

January 28, 2013

The "Do not never ever buy" list: '90s music revisionism

From here, a Chicago used record store's list of stuff that employees are instructed never ever to buy because the stuff just does not sell.

I doubt the supply side explains much, as if there were simply too many copies of these albums already on the shelves. Maybe for a few of the mega-sellers, but not for most. And there are mega-sellers from the '60s, '70s, and '80s that are still mega-sellers. This list seems to reflect audience tastes, namely that this music is not worth the money.

I only see a few popular '70s and '80s groups in there -- John Mellencamp, the Eagles, Men at Work, Journey, Foreigner, Boz Scaggs, and Kiss. Edie Brickell, Janet Jackson, and Whitney Houston were popular in the '80s and '90s, so I'm not sure which albums are brought in that never sell. The very early '90s, pre-alternative, aren't going to have many groups listed just because we're only talking about a 2-year period. Still, only Technotronic and C+C Music Factory are listed. EMF, Paula Abdul, etc. -- they must at least be able to sell at the minimum store price.

Then make way for just about every major and not-so-major '90s act -- Melissa Etheridge, 10,000 Maniacs / Natalie Merchant, Big Head Todd, Perry Farrell / Porno for Pyros, Tanya Donelly, Spin Doctors, K.D. Lang, Veruca Salt, Tripping Daisy, Collective Soul, Alanis Morissette, Jewel, Soul Asylum, Sting, Stone Temple Pilots… and there's an explicit mention that "Most 90s Bands" are never to be bought, rather than list every one of them separately.

I'm guessing the rest is dorky 2000s indie / emo / etc., although Jessica Simpson is mainstream. I'll bet the pop music of most 2000s bands will wind up on the Never Buy list before too long. There are two great entries: "obscure punk comps" (compilations) and "everything 'Pitchforky' - (just getting everyone prepared for the 2010's)". Pitchfork is one of those music-nerd websites that try to hype up unlikable noise, and its taste is dated to the 2000s.

Now, some of these don't deserve their no-love status -- like all the '80s stuff. Whether it's your favorite band or not, it's not never-buy music. But it's also a shame that no one wants to enjoy the Spin Doctors, Soul Asylum, and even 10,000 Maniacs, groups that were the last dying breath of lively music left over from the '80s college rock scene.

Yet the majority of the list has gotten what it deserves. Perhaps the defining feature of '90s music is how over-hyped it was. By now, everyone on the production and consumption side realizes how boring new music is, but at least they aren't aggressively marketing it as a fundamental break with the past that'll blow away all those lamewads who listen to music from 10 years ago.

Go look through Amazon reviews of early-mid-'90s alternative albums, and about half of the ones that include The Historical Context will whine about how everything was Bon Jovi and Poison in the late '80s, and thank god Nirvana, Pearl Jam, and Soundgarden came along to clean house and deliver us into a brave new world of alternative and grunge. Sorry, but those guys were the beginning of the end of the guitar solo, definitely of other instrumental solos like sax, synth, or penny whistle that you heard in the '80s, and of musicianship in general. Not to mention the flat, occasionally aggro emotional delivery.

It was all attitude, with little underneath musically or lyrically, just like punk -- another incredibly over-hyped style, verging on affectation. Sure, as the '90s and 2000s wore on, even the raw attitude would evaporate, leaving nothing at all. So I guess Soundgarden wasn't as bad as Nickelback, but they still ultimately sucked.

And anyway, in the late '80s it wasn't just Bon Jovi and Poison. College rock bands became hit sellers -- U2, REM, the B-52's, the Cure. Not to mention less enduring but still popular groups like Fine Young Cannibals, Love and Rockets, Edie Brickell & the New Bohemians, and Suzanne Vega, all of whom landed on the year-end Billboard singles chart. There were still aggressive non-buttrock bands like INXS and Red Hot Chili Peppers well before grunge / alternative took over. And not even a faggot alterna fanboy would lump Guns N Roses in with buttrock. During the late '80s and early '90s, GNR was way more popular and representative than Bon Jovi or Poison.

All that stuff about how Nirvana, etc., were a breath of fresh air is utter bullshit. Only some 10-year-old dork who wasn't tuning in to the radio or MTV would've thought that hair bands were the only beat happening in the late '80s and very early '90s. How else could you have missed the groups listed above unless you were being fed your music pre-digested by someone else? I'm guessing the clueless crusaders were too young to be sampling music on their own, and had an older sibling who was 100% into hair metal. (That describes my middle school friend Andy to a T.)

I never bought that story at the time, A) because I had good memories of buttrock blasting over the car stereo when my babysitters or my friends' cool older brothers would take us for a spin, and B) because I remembered that college rock sound playing within recent memory. From the vantage point of two decades later, I made peace with that early-mid-'90s zeitgeist and put together a list of '90s music worth saving from a fire. Almost none of it sounds grungy, despite the sleight-of-hand attempt, at the time and ever since, to lump the life-loving, melodic college rock bands in with the distancing, bland grunge bands.

There is clearly a lot more that needs to be written about the death of rock music, when so much has been devoted to its birth. Hopefully this will provide a start.

Categories:

Generations,

Music

A break in the clouds of pop music dreariness?

Starbucks has been playing this song for the past week or so, and the bassline is so funky and groovy that I can't help but drum my fingers along to it. It's got that mellow disco-sounding guitar too, so at first you'd think they were playing something new wave or pop-in-the-wake-of-disco. However, the lyrics and emotional delivery are so much a part of the whole "Girl, you so fine" ass-kissing genre that you know it must be from the 21st century.

So I tracked down the song based on the repetitive chorus, and it turns out it's by... Bruno Mars. Everything else I've heard by him has been forgettable, but I do keep an open mind. It's just by now I'm used to everything sucking so hard. Occasionally, though, a decent song squeaks through. "Treasure" gets this cranky old man's non-ironic stamp of approval.

Not surprisingly, it wasn't released as the lead single (the album came out last December). It'll be a real test of audience coolness if they release it at all and it becomes a club hit -- it's not the kind of beat that an attention whore can shake her ass to while standing still. You actually have to move your legs and body around, and it's too upbeat to dance alone to. I'm guessing it's ahead of its time, although in a few years young people may not be quite so awkward around each other, and this kind of song will catch on.

It feels more and more like we're heading into the mid-'50s phase of our neo-mid-century zeitgeist. So pop music probably won't sound as bad as it has for the past 20 years. It'll be a little bubble-gummy and timid, and probably won't survive whatever comes after it when the neo-Sixties arrives. Still, it's cheerful and infectiously danceable, which is a welcome change.

Categories:

Music

Racquet sports and overall fitness

(I was going to write a brief introduction to the main topic of the cycles in popularity for racquet sports, but then it got long enough that it felt like a post by itself.)

When you look back at how short men's shorts were in the 1970s and '80s, you have to ask why they didn't feel so self-conscious. Partly because people were more fit back then, including the legs -- not just man boobs and arm bulges from repetitive bench-pressing and curls. And their legs weren't over-developed from repetitive squats.

There was not a very big gym or "workout" culture back then, and yet the guys look non-freakishly fit. I've seen family pictures of my dad hanging out around age 30, and he had that look. So does Chevy Chase when they finally get to Wally World in Vacation, and so does Nick Nolte when scuba-diving in The Deep. If not the gym, how were they staying in shape?

There was a jogging craze around that time, but endurance runners don't get much muscle in the upper leg. In fact, they look pretty haggard. I remember my dad bike-riding a lot back then, although again if you've seen the typical schlub on a bike these days, you know that's not very plausible either. Not casual bike-riding anyway, another endurance sport.

You need some kind of explosive, intensive activity to develop decent leg muscle, and the average guy in the '80s wasn't training to be a football player or wrestler. Rather, what comes to mind is the mania back then for racquet sports that you just don't see anymore. In those sports, you aren't locomoting very much, kind of bouncing or staying prepared to sprint, but when you do move, it's in an intense burst. Every now and then, you're sprinting almost non-stop. And unlike joggers, sprinters develop larger legs. Plus it's not just running at a steady height, you're lunging down and springing back up.

Aside from stressing the upper leg muscles, all that burst-like movement gets your heart going a lot faster, above the threshold where your body recognizes that it's in a world where it's expected to really perform, and so it had better trim off the fat and toughen up the rest. If your activity level stays below that threshold, your body gets the signal that the world it has to interact with isn't so challenging -- just monotonous and never-ending. You improve your endurance, but not much else.

Compared to many other sports, the equipment and clothing for racquet sports are pretty cheap, and you don't need to get together a dozen others just to play a single game. And unlike working out at the gym, playing sports is social and fun. For both psychological and physical fitness, it seems like racquet sports were just what the country was looking for.

Categories:

Sports

January 24, 2013

Uncovering unwholesomeness in culture throughout history

Fifty years from now, when people try to figure out what life was like in the early 21st century, how many will know what the Grand Theft Auto video game series was? Or torture porn movies? Or the sado-masochistic character of much mainstream porn? That's just to pick three examples of the general shift toward voyeurism and sensationalism in the culture over the past 20 years.

It may be hard to remember (even more so if you weren't alive), but back in the '80s there was no lurid violence in young people's entertainment. The slasher flick presented all-American teenagers who were mostly likable, or at least sympathetic, although doing what hormone-crazed young people do (or used to do, anyway). This makes you feel for the victims when they get attacked. When the characters are annoying or downright unlikable, you're actually cheering the serial killer on -- "Finally we won't have to listen to that whining bitch anymore!"

Ditto all of the glorification of crime in video games over the past 20 years. In the video games of the '80s and early '90s, where crime was a theme at all, you played the good guys taking on the bad guys, with little or no gore. Kind of like Lethal Weapon with martial arts. Today, kids increasingly want to role-play as a hoodlum who deals drugs, steals cars, and kills hookers.

And porno movies then featured a guy and a girl who were hot for each other and felt like getting it on, neither one trying to exploit the other, just smiling and having fun. No shock or sensationalism. Today, more and more dirty movies emphasize domination, degradation, and humiliation, whether of the male ("femdom") or of the female (throat gagging). The prevalence of bondage themes is bewildering. How can so many people be so into such degrading stuff?

Yet when people try to reconstruct life in the early 21st century, I'll bet the turn toward sleaze doesn't make the textbooks or popular accounts. From what you learned in US history class, could you give even a basic contrast between the zeitgeist of the 1880s and that of the 1920s? Professional historians love getting into the nitty-gritty, if anything erring on the side of being too particularistic. But the average person, even the average educated person, just doesn't feel like knowing that much about the variations in the historical record is worth anything today. The past must therefore be homogeneous beyond a certain point in time, either uniformly worse than today if they're a progressivist, or uniformly better than today if they're a declinist.

Aside from the past 20 years, the other high point of unseen unwholesomeness was the mid-century, especially the '40s and '50s. I don't mean that there was a "seedy underbelly" to the white picket fence suburbs. It was right out in the open, and everyone at the time would've recognized it -- there could have even been a widespread moral panic about it -- but it hasn't been preserved in the popular memory.

The most flagrant example is the sleazy sensationalism of mid-century comic books, featuring just as much lurid torture porn and sado-masochistic imagery as today's dorky video games. I've read some of the secondary literature on this stuff, but today I finally picked up Seduction of the Innocent, a book that ignited a moral panic over the unwholesome nature of comic books back then, and eventually led to the Comics Code Authority, a censorship board within the industry itself, akin to the Hays Code in Hollywood movie studios.

I plan to post in more detail about comic books in particular, since they really were out there back then, and along with radio they were the dominant form of mass media entertainment for young people, like video games today (movie-going was dead, and TV was either non-existent in the earlier part, or slowly gaining viewers by the mid-'50s). But for now, have a look through an online gallery of comic book covers from those days -- and that's not even including the full story inside. The butt-kicking babe, bondage, gore, role-playing as the clever criminal, the intended lack of sympathy running through it all -- it could be straight out of today's youth culture.

Seduction of the Innocent is also available free online; most of the images are not those from the original book, but similar ones that still prove the point. Skim through the chapters named "I Want to be a Sex Maniac" (about the bizarre sexuality so often shown) and "Bumps and Bulges" (about the ads -- increase your bosom size, use this telescope to peep on your neighbors, etc.). You'll never think of the '50s the same way again.

Last, it should go without saying, but "unwholesome" is a property of the cultural item itself -- it doesn't matter what broader social consequences it has. I'm more aware than anyone else of how the violent crime rate kept falling during the mania for lurid S&M comic books, as well as during the neo-sleazy renaissance of the past 20 years. Whether the crime rate shot up or bottomed out in response to comic book sensationalism, the things themselves can't but strike you as unwholesome and sleazy, something that would appeal to weak, passive, anti-social minds.

Unwholesomeness is deplorable not because of its effects or non-effects on material well-being and safety, but because of what it says about our social and psychological health. It triggers our disgust mechanism, not our harm-avoidance mechanism, but that doesn't make it any less important.

Categories:

Books,

Crime,

Design,

Dudes and dudettes,

Morality,

Pop culture,

Violence

January 22, 2013

The return of sincerity, now and then: A backlash against the Christmas sweater backlash

I don't know if you guys visited any clothing or department stores when you were out gift shopping, but I couldn't believe how many winter / ski / Nordic / Fair Isle / Christmas sweaters were on display non-ironically. Actually, not very many per store, but still -- greater than zero.

And not just in the Polo and Tommy Hilfiger sections, where they've always remained for aspiring preppies. Even Urban Outfitters had about a half dozen. I picked up two that had a cool American Southwest feel, whether in the color palette or the pattern, our own regional version of a Northern European tradition.

Well, that's Urban Outfitters, and they've always catered to the vintage-loving crowd. What about some place more trendy and "fashion-forward" like H&M? They had a few, too, including this cardigan that I snagged as a gift for my mother:

In fact, here is a 2011 post from some fashion site, whose Facebook page has over 60,000 likes, daring the readers to go ahead and wear something chic and charming when they attend their friends' ironic ugly sweater party, showing 10 examples. And the snark brigade is so uptight that they'd take it personally -- "oh my gosh, did you seriously just go there? wow, i guess you honestly don't mind being 'that girl' at the party, do you?" Good: nobody deserves having their party pooped on more than the party-poopers.

So, is the 20 year-long decline of sincerity finally done with? Well, it's probably got a few more years to go, but the widespread re-introduction of non-ironic Christmas sweaters is a hopeful sign that it's at least bottoming out.

This takes us back to the '50s all over again. Fair Isle and similar-looking sweaters were all the rage during the 1920s and early '30s, then fell out of favor during most of the mid-century. They went against the minimalist aesthetic of the day, having too much and too many colors, repeating patterns, and the dreaded traditional feel no longer suitable in a World of Tomorrow kind of world. Not to mention the aversion to anything with a hint of sentimentality, in the era of the Three Stooges and the Marx Brothers. Sound familiar?

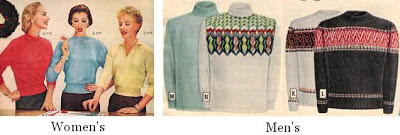

Here is a digitized online collection of old Sears Christmas catalogs. By the 1952 edition, there are zero Christmas sweaters for sale, not for men, not for women, not for children. The next one they have online is the 1956 edition, and by then they'd begun to creep back in, though only for men. Women's sweaters were still just about uniformly devoid of any pattern, or of multiple colors. From the '56 catalog:

Just a few years later, the return has grown even stronger. The caption for the sharp-dressed father and son opens, "Glowing with color, reflecting the warmth and spirit of winter sports... Norwegian ski design favored by color-conscious men." From the '58 catalog:

Through the end of the '50s, women still couldn't break free from their conformist wallflower tendencies and join the men in wearing festive sweaters during the Christmas season. Once the 1960s got going, though, they couldn't resist any longer. The '60 and '61 catalogs are not online, but the one from '62 shows several for women. Most are part of a his-and-hers set, as though they needed to be guided into it, but a few pictures show women wearing Christmas sweaters on their own.

By the mid-1950s, the stifling atmosphere of the previous 20 years (after the death of the Jazz Age) had grown tiresome -- for some, anyway, and most of them men. Not only did the sincere fun of sporting Christmas sweaters begin to make a long-awaited comeback, but they started to grow restless with the corporate slave culture, as shown in the hit novel and movie The Man in the Gray Flannel Suit. Sound familiar? Hopefully it will pretty soon now.

Personally I find it heart-warming to look at the birth years of those involved in this initial push away from mid-century managerialism, obsequiousness, drabness, and insincerity. The major forces behind The Man in the Gray Flannel Suit, both the novel and the movie, The Apartment, The Lonely Crowd, the 30-something men in the Sears catalogs -- almost entirely come from the later part of the Greatest Generation, mostly from the late 1910s through the mid-'20s, with a few going back to the late 1900s.

Based on where they were born in relation to the peak in the crime rate, which largely determines the zeitgeist, those guys correspond to men born mostly from the latter half of the '70s through the first half of the '80s, with some going back to the late '60s. I.e., Generation X and the smaller Gen Y. Not the Silents, and not the Millennials.

We're old enough to remember what high spirits our communities used to be in when we were children, we had to suffer a good part of our young adulthood in the opposite place (the '90s and after), yet we're still young enough to feel like there's something left worth fighting for, not just "Well, I had my fun in the '60s, '70s, and '80s, so I can't get angry about where things are headed."

We're now in our 21st year of falling crime rates. Before, the 21st year of falling crime rates was 1954. Seems like the next few years should see the beginnings of the return of sincerity, trust, and openness -- and then a few years after that, rising crime rates, as we allow ourselves to spend more time in vulnerable public spaces, deciding that that's just the risk you have to take to keep from living half of a life holed up at home.
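The generational matching above rests on a bit of arithmetic that the post leaves implicit. Here is a minimal Python sketch, assuming the two crime peaks are simply backed out of the "21st year" figures given here (2013 for the present, since the post is dated January 2013, and 1954 for the earlier wave), so each peak falls 21 years before its matching year. The variable and function names are illustrative only:

```python
# Assumption: peak years are inferred from the post's own "21st year of
# falling crime rates" statements, not taken from an external crime series.
YEARS_OF_DECLINE = 21
CURRENT_YEAR = 2013        # the post is dated January 2013
EARLIER_MATCH_YEAR = 1954  # "the 21st year of falling crime rates was 1954"

recent_peak = CURRENT_YEAR - YEARS_OF_DECLINE          # 1992
earlier_peak = EARLIER_MATCH_YEAR - YEARS_OF_DECLINE   # 1933
OFFSET = recent_peak - earlier_peak                    # years between the two cycles


def counterpart_birth_year(birth_year: int) -> int:
    """Shift a birth year from the earlier crime cycle onto the current one."""
    return birth_year + OFFSET


if __name__ == "__main__":
    for year in (1908, 1917, 1925):
        print(year, "->", counterpart_birth_year(year))
```

With a 59-year offset, a birth year in the late 1900s lands in the late '60s, one in the late 1910s lands in the second half of the '70s, and one in the mid-'20s lands in the first half of the '80s. That matches the correspondence described above.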

Categories:

Cocooning,

Design,

Generations,

Pop culture

January 19, 2013

Neo-Victorian bonnets

The hair on our head is the one clear-cut case of ornamentation like the peacock's tail. It just keeps growing and growing and growing for no reason, other than to get noticed. In infancy, it's short, wispy, and subdued in pigment. And in old age, it's short, wispy, and subdued in pigment. It's only during adolescence and early adulthood that it's thick, long, and richly colored, another sign of hair's role in dating and mating.

It's no surprise then that in cultures where women's sexuality is tightly controlled, they minimize the appearance of their hair. This includes not only cultures where a woman has her look policed by others (typically by older women, not by men, who have more important things to do), but also those where the woman just doesn't feel like displaying her sexual maturity in the first place.

Other parts of her appearance, both natural and artificial, may serve other functions and are not necessarily so rigidly regulated. Colder weather tends to favor more skin being covered up, and hotter weather less. Frequent walking throughout the day selects for shorter hemlines on flowing robe-like clothing, so that strides may be taken less clumsily. But since all that hair seems to serve no other function, it can be pinned up and covered up without affecting the woman's daily activities.

We don't live in a pastoralist culture of honor where women's modesty in appearance is enforced mainly for married women, and where unmarried women are allowed to let their hair down so they can attract a husband before it's too late. Rather, we seem to be moving more toward the agrarian extreme where women don't mind dulling themselves down because they aren't chosen on the basis of looks so much as their ability to produce wealth, whether by toiling out in the fields or by getting a degree and a career. We're becoming less Mediterranean and more East Asian.

And it's not just that girls aren't putting much thought into looking pretty, but are otherwise well adjusted psychologically about their sexuality. They come off as awkward. Just read the faces you see in a Google image search for "slouchy hats". Their eyes are lost in that whole hipster fairy-child dream they have, of returning to a pre-pubescent stage and roaming the empty fields alone, or at most with a drab-looking non-boyfriend who won't ever make a move to touch her "down there".

See the lookbook of any store aimed at older teenagers, like Urban Outfitters, American Eagle, or Hollister. The pitch is, "The perfect clothes for indulging your awkwardness and lonesomeness." What's truly bizarre is that they're not targeted at the ugly and socially invisible girls. Everyone in the Millennial generation is pretty dorky, so even the cute girls in the dorm fantasize about the freedom to feel awkward with no one else around to notice them and make them feel, uh, awkward.

Categories: Cocooning, Design, Dudes and dudettes, Pop culture

January 17, 2013

Teenagers less likely to have a driver's license than any time in the past 50 years

I've already got a few other graphs up like the one below (search for "license"), but now the 2011 data are in from Highway Statistics. The percent of eligible teenagers, 16 to 19, who actually have a driver's license continues to tumble:

At 51% in 2011, and at the rate it's falling, it's probably already below 50% in 2012. In 2011, 28% of 16-year-olds had a license, as did 45% of 17-year-olds (!), 60% of 18-year-olds, and 69% of 19-year-olds. So by now, probably one-third of 19-year-olds do not have a license. How do they get through airport security on trips to and from college, during spring break, or on any other kind of trip? If they're going through all the trouble of getting a passport or other government ID, why not just take that time and learn how to fucking drive?
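As a quick arithmetic check on those 2011 figures: if we assume the four single-year cohorts are roughly the same size (an assumption, not something the data confirm), the single-year rates average out very close to the pooled 51%, and the 19-year-old rate means about 31% of that age had no license. A minimal Python sketch; `rates_2011` and `pooled_rate` are illustrative names, not part of any data source:

```python
# Single-year license rates for 2011, as quoted above (percent).
rates_2011 = {16: 28, 17: 45, 18: 60, 19: 69}

def pooled_rate(rates, weights=None):
    """Average single-year rates, weighting each age by its cohort size.

    With no weights given, every cohort is assumed to be the same size,
    which is a simplifying assumption rather than a measured fact.
    """
    if weights is None:
        weights = {age: 1 for age in rates}
    total = sum(weights[age] for age in rates)
    return sum(rates[age] * weights[age] for age in rates) / total

print(pooled_rate(rates_2011))  # 50.5
print(100 - rates_2011[19])     # 31 (percent of 19-year-olds without a license)
```

Passing real cohort sizes as `weights` would give a properly population-weighted figure instead of the equal-size approximation.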

For anyone who has even minimal contact with real teenagers today, this can come as no surprise. They feel so uncomfortable around other people that they'd rather just stay home and wrap themselves in their cyber-cocoon. Feeling so awkward in a social context, they prefer that their friends exist only in a virtual world where they don't have to actually meet up with them, restricting their "interactions" to online video games, Facebook, and texting.

But enough ragging on today's defenseless dorky young people. For this installment, I've managed to dig up some older data that go back to 1963. I didn't cover this before because the driver's license data that far back only say how many people have a license in some age group, not what percent of the age group does. But in the meantime I've gone through lots of data on the age structure of the population, so I can compare the number with a license to the number overall within some age group.

My age-structure data lump all 15-19 year-olds together, so I can't isolate just the teenagers who are 16 and older. But the driver's license data have a category for anyone 19 and under, so I can divide one by the other. Not a huge change in what we're looking at, then.
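The calculation described above amounts to dividing licensed drivers aged 19 and under by the population aged 15-19, year by year. Here is a minimal sketch, assuming both series are stored as dictionaries keyed by year; the function and variable names are hypothetical, and no actual Highway Statistics or census figures are included:

```python
def license_share(licensed_19_and_under, pop_15_to_19):
    """Approximate percent of teenagers with a license:
    (licensed drivers aged 19 and under) / (population aged 15-19) * 100.

    The age bands don't match exactly: the denominator includes
    15-year-olds, most of whom can't drive yet, so the result likely
    sits somewhat below the true 16-19 rate.
    """
    if pop_15_to_19 <= 0:
        raise ValueError("population must be positive")
    return 100.0 * licensed_19_and_under / pop_15_to_19

def share_by_year(licensed, population):
    """Apply license_share to every year present in both series."""
    return {year: license_share(licensed[year], population[year])
            for year in sorted(licensed.keys() & population.keys())}
```

Only years present in both series are used, so gaps in either data set simply drop out rather than raising an error.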

This graph only goes up through 2010, but the decline over the past 20 years is still evident. The neat thing to notice here is that it wasn't at some constant high value before the decline. That's a common misconception about declines -- that everything had been cruising along nice and steady, when suddenly something disrupted the world and we've been in decline ever since.

As it turns out, teenagers in the early 1960s were about as likely to have a license as their counterparts in the declining period of the late '90s. Before the decline, young people became more and more likely to want a license to drive, before reaching an uneven plateau during the late '70s and the '80s. The protracted decline does not begin until 1990, another example of how cocooning behavior seems to slightly precede a fall in the crime rate. (Indeed, cocooning causes falling crime rates, as the predators have a harder time finding vulnerable prey out in the open.)

Economics does not explain the pattern, as cars have only gotten cheaper during the entire period, and parents only more willing to pay for their kid's insurance and even gas and maintenance. Or, more typically, they let their kid drive the family car, and family cars, again, have only gotten cheaper and more ubiquitous over the past 50 years.

What about how youthful the population is? Perhaps when it's growing younger and younger, there's a feeling of excitement in the air, and especially the young people themselves don't want to feel like it's passing them by. Better jump on board while you can, and you need a driver's license to take part in the unsupervised socializing of your fellow teenagers.

Well, close but not quite. Below is a graph of the number of 15-29 year-olds compared to the number of 30-59 year-olds. This ratio reflects the balance between the force pushing for more excitement and the opposing force pushing for more containment.

It rises through a peak in the late '70s and begins a steady fall after 1980, yet the sharp drop in driver's licenses doesn't begin for another decade. Also, there's been a steady if small rise in the youthfulness ratio since the early 2000s, yet young people have only withdrawn more and more from other people.
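The youthfulness ratio described above is simply the head count aged 15-29 divided by the head count aged 30-59. A minimal sketch, assuming the population data come as a dictionary mapping age to head count (the function name is hypothetical):

```python
def youthfulness_ratio(pop_by_age):
    """Ratio of people aged 15-29 to people aged 30-59.

    pop_by_age maps an age (int) to a head count; ages outside
    both bands are ignored.
    """
    young = sum(n for age, n in pop_by_age.items() if 15 <= age <= 29)
    middle = sum(n for age, n in pop_by_age.items() if 30 <= age <= 59)
    if middle == 0:
        raise ValueError("no one aged 30-59 in the data")
    return young / middle
```

Because the bands are tested by age, the same function also works if the data come in five-year bands keyed by their starting age (15, 20, ..., 55), which is how age-structure tables are often published.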

Again, the changes over time look more like the outgoing vs. cocooning pattern. It's more or less the crime rate graph shifted earlier by a few years. For example, youngsters seemed to become more outgoing and rambunctious a few years before the 1959 start of the most recent crime wave. And on the flip side, they began to withdraw into their own private spaces a few years before the 1993 start of the falling crime rate. These kinds of cycles, slightly out of sync with each other, suggest that the "ecology" of criminals and normal people out in public is like that of predators and prey: perhaps not so shocking a view, but still worth keeping in mind.

Categories: Age, Cocooning, Crime, Pop culture

January 14, 2013

When else did everybody's names rhyme?

Imagine you're at a party, or hosting one for your kids, and a group of guys introduce themselves as Tyler, Kyler, and Skyler. "Omigosh, that is seriously kind of amazing -- we're Elsie, Kelsey, and Chelsea! You guys just missed Kennedy and Serenity, but I'm sure they'll be back soon..."

I don't know about you, but when there are so many made-up names that rhyme with each other, it doesn't create an it-just-happened-that-way kind of delightfulness. It sounds hamfisted, like forcing a rhyme between "mobster" and "lobster". The evident self-consciousness just makes the whole thing seem phony and off-putting. It's campy, not charming. (By the way, those are all real names in the top 1000 for babies born in 2011.)

I've been looking through how similar the popular names sound over time, and once it's all collected and analyzed, I'll hopefully write something up here. But to show how wildly these things can cycle, let's take a quick look at four years that began four iconic decades -- 1920, 1950, 1980 and 2010. Below are the names in the top 100 for girls that rhyme.

1920 -- 19 rhyme

Ellen, Helen

Clara, Sarah

Ella, Stella

Bessie, Jessie

Willie, Lillie

Rita, Juanita

Jean, Irene, Eileen, Pauline, Maxine, Kathleen, Geraldine

1950 -- 37 rhyme

Gloria, Victoria

Bonnie, Connie

Anna, Diana

Mary, Sherry

Carol, Sheryl

Carolyn, Marilyn

Ellen, Helen

Sharon, Karen

Brenda, Glenda

Jane, Elaine

Rita, Anita

Ann(e), Dian(n)e, Joann(e), Suzanne

Jean(ne), Irene, Eileen, Kathleen, Christine, Darlene, Maureen

1980 -- 30 rhyme

Monica, Veronica

Sara(h), Tara

Erin, Karen

Mary, Carrie

Michelle, Danielle

Amy, Jamie

Misty, Christy (Kristy)

Lisa, Teresa

Christine, Kathleen

Leah, Maria

Tina, Gina, Katrina, Christina (Kristina)

2010 -- 36 rhyme

Chloe (Khloe), Zoe(y)

Riley, Kylie

Maya, Mariah

Brianna, Gianna, Arian(n)a

Madison, Addison

Hannah, Anna, Savannah

Isabella, Ella, Gabriella, Bella, Stella

Hailey, Kaylee, Bailey

Layla, Kayla, Makayla

Mia, Leah, Maria, Aaliyah, Amelia, Valeria, Sophia (Sofia)

When you weight the names not only by whether or not they have a rhyming partner, but by how many such partners they have (i.e., whether they come as mere twins or as octuplets), the picture is even clearer that 1950 and 2010 were high points in mindless conformity, while 1920 and 1980 were low points. I qualify "conformity" with "mindless" to distinguish it from a meaningful, heartfelt kind. Meeting the neighbor's kids who are named Huey, Dewey, and Louie doesn't fill me up with fellow-feeling; it just sounds goofy. If anything it's alienating, making me wonder what planet I've landed on.
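The post doesn't spell out the exact weighting, so here is one possible reading, sketched in Python: every name in a rhyming group of size n has n - 1 partners, so the group adds n names to the plain count and n(n - 1) to the weighted total. The 1920 and 2010 lists from above are entered as an example, with each spelling variant counted as its own name (which is what the plain totals of 19 and 36 imply). The names `RHYME_GROUPS` and `rhyme_counts` are hypothetical:

```python
# Rhyme groups from the 1920 and 2010 top-100 lists above; spelling
# variants such as Zoe/Zoey are entered as separate names.
RHYME_GROUPS = {
    1920: [
        ["Ellen", "Helen"], ["Clara", "Sarah"], ["Ella", "Stella"],
        ["Bessie", "Jessie"], ["Willie", "Lillie"], ["Rita", "Juanita"],
        ["Jean", "Irene", "Eileen", "Pauline", "Maxine", "Kathleen", "Geraldine"],
    ],
    2010: [
        ["Chloe", "Khloe", "Zoe", "Zoey"], ["Riley", "Kylie"], ["Maya", "Mariah"],
        ["Brianna", "Gianna", "Ariana", "Arianna"], ["Madison", "Addison"],
        ["Hannah", "Anna", "Savannah"],
        ["Isabella", "Ella", "Gabriella", "Bella", "Stella"],
        ["Hailey", "Kaylee", "Bailey"], ["Layla", "Kayla", "Makayla"],
        ["Mia", "Leah", "Maria", "Aaliyah", "Amelia", "Valeria", "Sophia", "Sofia"],
    ],
}

def rhyme_counts(groups):
    """Return (names with at least one partner, partner-weighted total).

    A group of size n contributes n to the plain count and n * (n - 1)
    to the weighted total, since each of its names has n - 1 partners.
    This weighting is an assumption, not necessarily the author's method.
    """
    plain = sum(len(g) for g in groups)
    weighted = sum(len(g) * (len(g) - 1) for g in groups)
    return plain, weighted

for year, groups in RHYME_GROUPS.items():
    print(year, rhyme_counts(groups))
# 1920 (19, 54)
# 2010 (36, 124)
```

Under this weighting the gap widens: 2010 has a little under twice as many rhyming names as 1920, but well over twice the partner-weighted total, because big clusters like the Mia and Isabella groups count quadratically.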

It's a cheap display of willingness to play on the same team. Actively keeping an eye on each other's kids, hosting them at sleepovers, and sending them around to trick-or-treat would be costly and honest displays of team-mindedness. When people want to cocoon, they have to at least hold up a fig leaf of community spirit, so we get these overly eager, almost caricatured forms of togetherness.

Treat this as news you can use, and hold off having kids until this index starts to fall for a while. Then you'll know that the rest of society is backing away from hive-like social behavior and returning to neighborly trust, making way for communities worth raising children in.
