While browsing around the stacks, I saw a spine labeled Loose Girl: A Memoir of Promiscuity. I thought, Oh great, another one of those intentionally outrageous titles trying to over-hype how sexually active girls are these days, when they'd rather diddle their phone than flirt with boys.

But then "1980s" caught my attention on the first page, referring to how common it was then for adolescents to have divorced parents. Oh OK, this is going to be a look back at a time when sluts and nymphos could still be found stalking the high school hallways. I read the first chapter of about 10 pages and flipped through parts of the rest.

The takeaway is that she gave herself over to an endless series of interchangeable guys, whether she truly desired them or not, and rarely enjoyed a moment of pause when she wasn't pursuing or hooking up with some guy. That pattern came from her need for attention, her fear of being alone, and her wish to be taken care of emotionally by someone more effectual, all in order to relieve an ever-present, gnawing anxiety about being left to fend for herself in an uncaring world. She is a textbook case of codependency.

That's not the kind of personality we'd like to see in our daughters, and such behavior would worry us if she were our friend. Still, these extreme cases are useful to infer how approaching vs. cocooning the general population is. The farther we are toward the approaching and self-denying side, the higher the fraction who will exceed the threshold of codependence. The farther toward the cocooning and self-focused side, the lower the fraction.

Looking at how this fraction grows or shrinks therefore tells us which direction the entire population is moving in. In times when the fraction is higher, it doesn't mean the average person is way off in the codependent extreme -- that's a hysterical exaggeration of the same type that says Thank God we're out of those highly homicidal 1980s, as though we all fought off murderers every time we stepped out the door. Rather, it means that the average person is simply more approaching toward others, and more willing to set aside their personal wants in order to satisfy those of others.
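To make the average-versus-tail point concrete, here is a toy calculation. It assumes, purely for illustration, that the approaching/cocooning trait is normally distributed with a standard deviation of 1, and that the "codependent" threshold sits 2 SD above the baseline average. Both numbers are hypothetical and not taken from any survey. The sketch shows how the share past a fixed threshold responds when the whole bell curve slides a little.

```python
from statistics import NormalDist


def fraction_above(threshold: float, mean: float, sd: float = 1.0) -> float:
    """Share of a normally distributed population lying above `threshold`."""
    return 1.0 - NormalDist(mu=mean, sigma=sd).cdf(threshold)


THRESHOLD = 2.0  # hypothetical cutoff for "codependent", in SD units

# Slide the average of the whole population by half an SD either way.
for shift in (-0.5, 0.0, 0.5):
    share = fraction_above(THRESHOLD, mean=shift)
    print(f"mean shift {shift:+.1f} SD -> {share:.2%} above threshold")
```

Under these assumptions the share past the threshold goes from roughly 0.6% to 2.3% to 6.7%. A half-SD move in the typical person nearly triples the extreme fraction, while the typical person stays well inside the normal range.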

Unfortunately, public surveys don't ask these kinds of questions, let alone over a long time span. However, let's take a look at how common the term "codependency" has been in Google's digitized library of books (Ngram):

You can't tell because of the scale, but there are sporadic hits in the 1960s and '70s, before it soars during the '80s and early '90s. When you un-smooth the curve, the peak year is 1992. And it's come crashing down over the last 20 years. The same pattern shows up no matter what variation on the term you choose.

That fits with the publication of the most popular books on the topic. If you google "codepen..." it will suggest the title of the mega-selling self-help book, Codependent No More: How to Stop Controlling Others and Start Caring for Yourself. Two others by the author on the same topic appear in the suggestions at Amazon. All were published between 1986 and 1990, foreshadowing the reversal of the phenomenon starting in the mid-'90s.

What has replaced the needy, clingy codependent since then? Their opposite: the dismissive avoidant type. In the framework of attachment theory, the clingy type has a view of others that is positive -- others are effectual and deserving of support -- and a view of self that is negative -- ineffectual and unlovable. The dismissive type is their inversion -- others are incapable and worthless, while I'm so awesome at what I do, and you'd have to be delusional not to love me.

We would like for everybody to be within the healthy, normal range of personality and behavior, but in real life those traits are distributed in a bell-shaped curve, so we're always going to have some extreme cases with us. The open question is -- what type of extreme will they be? I don't know about you, but I'd rather go to school, work, and bed with someone who is needy and self-effacing than with a dismissive egomaniac.

November 15, 2013

November 14, 2013

The sex trade and inequality, 1920 to present

In earlier posts on the historical cycles of perversion, I looked at how popular culture revealed the audience's preferences. See here for a list of posts on the unwholesome mid-century. Briefly, in times of cocooning people show a lurid interest in sexuality, and in outgoing periods, a jocular attitude.

I'm most interested in what made people tick in different time periods, so I look at the demand side. The supply side is complicated by technological progress, which moves independently of the social-cultural zeitgeist. And in any case, I don't find the production or business side of pop culture as fascinating as what some phenomenon reveals about everyday people.

But for some phenomena, you might have to take into account what factors motivate the people on the supply side. Not so much "what attitudes do business owners and managers have?" but more like "what would lead the actual workers to take part in the business that creates the product?" Those choices could also reflect the cocooning / outgoing trend, but decisions about economic life will probably respond more to the status-seeking / status-ignoring trend.

Turchin's work on the dynamics of inequality puts status seeking, over-production of aspiring elites, and intra-elite competition behind inequality (an effect of those divisive processes). See this post of his on the topic, using lawyers as a case study. I'll refer to status-seeking or rising-inequality depending on which aspect seems to be more important.

Something I could never explain using just the cocooning vs. outgoing framework is why the mid-century sex culture, while just as lurid as ours is, nevertheless did not specialize in pornographic photography or movies, lapdance clubs, 1-900 phone sex services, and so on. It was more lurid comic books and pulp novels, voyeuristic girlie shows (with no contact or proximity), and the incredibly rare stag film (hardcore). *

The state of their technology allowed for all of our forms of commercialized vice, yet they didn't make nearly as much use of it (they didn't even take a stab at inventing phone sex). So the answer must lie more with economic motives of the workers themselves.

During the Great Compression of circa 1920 to 1970, young women showed a declining interest in climbing up the status ladder as fast as possible and in financing the acquisition and display of luxury by earning quick-and-easy money. Their increasingly modest tastes made them feel more comfortable with a slow-but-steady typing job. Sure, a stint as a pornographic actress could have raked in a lot more dough than that, but what would she do with all that money? She wasn't going to stain her feminine honor just to add unnecessarily to her bank account.

This shows the importance of looking at both supply and demand. The demand was there, but in those days it just wasn't true that "everybody has their price," so much of that demand went unmet.

Beginning in the '70s, and becoming more and more visible over the '80s, women shifted their priorities, becoming more fashion- and status-conscious. Their interest in fashion was more fun-loving in the '70s and '80s, and not as hostile as it became in the '90s and 21st century, when the separate shift toward cocooning made them less interested in getting along with others on an interpersonal level.

And sure enough, that's when hardcore pornographic movies began to grow, continuing upward right through the present. That steady rise since the '70s/'80s is unlike the changes in interpersonal sexual behavior, where folks were more and more connected during the '70s and '80s, but segregated themselves from the opposite sex during the past 20 years and don't get it on as frequently as they used to. You see the same steady rise in lapdance clubs, and virtual outlets like phone sex, nude photos, and webcam girls.

The only area that shows a decline is prostitution, where arrest rates fell by over 40% from 1990 to 2010. I interpret that as a substitution effect from all those other outlets. They're all ways to get relief through transactions rather than interactions, and the men who pay for those things weren't so concerned with making it with a real-life partner that they'd risk disease, arrest, fines, etc.

In periods of rising inequality, the bottom chunk of society feels like the deck is stacked against them. So lower-status men, sensing the widening divide, might reason that it's not worth even bothering with the real-life interaction game, where the available women are already taken by high-status men, or have effectively taken themselves off the market by waiting around for a taken high-status man to become newly available. **

Those lower-status guys still have a libido, though, so they'll drive up the demand for commercialized sex. As long as he has enough cash or credit, his lack of status won't count against him. This effect on males comes more from inequality, while the effect on female sex workers comes more from the status-seeking trend closely related to it. Giving schlubs a lap dance, or shooting a porn scene once a week, is a quick and easy way to finance your conspicuous consumption and student loan debt (the higher ed bubble being another consequence of status-seeking).

All this predicts that we ought to find a burgeoning economy of commercialized sex during the Gilded Age and Progressive Era, as inequality rose toward its peak in the mid-1910s. Would it surprise you to learn that prostitution was widespread during the Victorian era? Perhaps a more helpful name for the period would be the Victorian-Dickensian era, to remind folks of the heartlessness and the growing disconnect between the upper and lower layers of society.

I planned to review the history before 1920 in this post, but it's already gotten long enough, so I'll save that for a follow-up. It really deserves its own post, too, since all of it will be unfamiliar to today's readers.

* Judging from ubiquitous ads in comic books, teenage boys were eager to buy X-ray specs and pocket spy telescopes in order to see girls naked, or to learn the art of hypnosis in order to get a girl to sleepwalk into their beds and get it on with her against her will. You didn't see lurid stuff like that in the teenage culture of the 1980s, because boys were more socially connected to girls, went out on dates, and got what they wanted.

** Is economic inequality reflected in dating-and-mating inequality? Hunter-gatherers don't have harem organization, while concubines and other forms of polygyny are often found among more stratified groups like large-scale agriculturalists.

Categories: Cocooning, Crime, Dudes and dudettes, Economics, Media, Psychology, Technology

November 10, 2013

The return of old diseases and pests with rising inequality

When the rich get richer and the poor get poorer, you'll see all sorts of new phenomena that reflect the rise in the ranks of very wealthy people, particularly if the phenomenon allows the person to engage in intra-elite status competition (a correlate, or even a cause of the inequality).

Like that show on MTV about which wealthy family could spend the most, and in the most original way, on the daughter's 16th birthday bash. As you might expect, the spoiled daughter was never grateful and often cursed the parents out or cried about how they had ruined her party. But making their daughter happy was never the point -- it was to step up the competition against the heads of other wealthy families.

How about at the low end? There may not be an upper bound to how much wealth you can own, but it would seem that you can't get any poorer than $0. Even if you count debt, most lenders only let you get so far into negative wealth before they cut you off. Can it go lower still? Yes, if we look more broadly at quality of life rather than only income or wealth.

Health is the most obvious place to look first. Now the lower bound on "poor" goes down to being dead. Before you hit the lowest low, though, you can develop all manner of sicknesses. Or contract them -- repeatedly contracting a disease will make your life miserable for a lot longer and more intensely than the slow, barely perceptible build-up toward a debilitating disease of old age.

Let's start with an example from infectious person-to-person diseases. Despite all that antibacterial soap and hand sanitizers, influenza isn't any less common than it was 15 years ago. That quick reality check tells us to be wary of technological explanations for trends in disease.

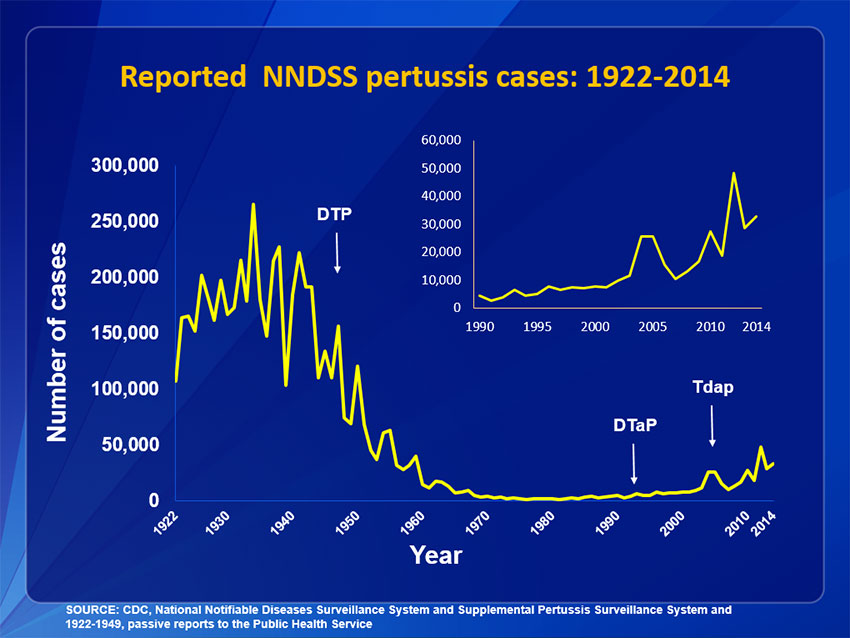

To make the point more forcefully, we need a case with data that go back a long time. Say, whooping cough (pertussis). Here is the CDC's page on trends in the disease, which contains the following chart:

Those three acronyms with arrows pointing down refer to the introduction of different vaccines, the first coming out in the late 1940s. Right away we see that they have absolutely nothing to do with the dynamics of the disease they're supposed to protect against. Incidence is rising until a peak around the mid-1930s. The steady decline begins a good 10 years before the vaccine is even introduced, and its introduction does not accelerate the decline (it decelerates after that point, if you want to make anything of its introduction at all). Without any vaccine at all, the incidence had already dropped by roughly half as of the late '40s. Since a low-point around 1980, the incidence has exploded by a factor of 50 -- despite not one but two new vaccines introduced during that time.

That graph does not match a graph of medical technology, but of inequality. Why? Beats me, but it does. It's not access to health care -- that graph shows cases, not dire outcomes like mortality. And again the main preventative measure that could be obtained from health care doesn't explain the rises and falls anyway. For crowd diseases, I lean toward blaming increasingly crappy living conditions -- not just for the private household, but the wider crappiness of the block and the neighborhood that the bottom chunk lives in. It's a return to the dilapidated tenements of the early 20th C., which were made into much nicer places during the Great Compression of 1920-1970.

You can fill in "increasingly lax regulation" for all these examples, to explain how the conditions were allowed to worsen. In general, welfare state policies and regulation go together with falling inequality.

Speaking of ramshackle apartments, whatever happened to that children's line about "Good night, sleep tight, don't let the bed bugs bite?" Well, the suckers are back. I checked Wikipedia's page on the epidemiology of bed bugs, and poked around the JAMA source. Bed bug infestation shows roughly the same dynamics as whooping cough, even though bed bugs are macroparasites rather than a contagious bacterium. They began decreasing sometime in the 1930s and bottomed out sometime around 1980, increasing since then. I never noticed any problem with them growing up, but exponential growth looks slow when it starts out -- the part where it takes off like a rocket happens a little later, during the 2000s in the case of bed bugs. By then several major cities began freaking out.
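Why exponential growth "looks slow when it starts out" can be seen with a toy projection. The 10% annual growth rate and the 30-year window below are hypothetical round numbers chosen for illustration. They are not estimates from the bed bug data. The growth rate is constant throughout, yet the absolute increase piles up at the end.

```python
def project(start: float, rate: float, years: int) -> list[float]:
    """Level in each year under constant proportional (compound) growth."""
    return [start * (1 + rate) ** t for t in range(years + 1)]


levels = project(start=1.0, rate=0.10, years=30)  # hypothetical 10% per year

first_half = levels[15] - levels[0]    # absolute increase, years 0-15
second_half = levels[30] - levels[15]  # absolute increase, years 15-30

print(f"added in years 0-15:  {first_half:.1f}")
print(f"added in years 15-30: {second_half:.1f}")
```

Both halves grow by the same factor (about 4.2x). But the second half adds about four times as much in absolute terms (roughly 13.3 versus 3.2 units). Someone watching the first 15 years would notice little, and someone watching the next 15 would see a rocket.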

Not knowing anything about the ecology of bed bugs, I'd point again to crappy living conditions as the breeding ground, although they may certainly spread out from there to better maintained places nearby.

Immigration also plays a huge role here, since if foreigners carrying bed bugs not only drop by but stay put in our country, there's little we can do. Immigration is another correlate of inequality (see the Peter Turchin article linked in the post below). After it was choked off during the 1920s, bed bugs began to disappear. As it began rising in the '70s, it wouldn't be long until bed bugs made a comeback.

Does immigration relate to whooping cough in the same way, being brought here and maintained by a steady influx of pertussis-stricken foreigners? Probably, though someone (not me) should look into that in more detail.

Finally, there are food-borne illnesses. We all remember the deplorable sanitary conditions detailed in Upton Sinclair's muckraking novel The Jungle from the Progressive era. I don't have any data from back then or the middle of the century, but given how little hysteria there seems to have been by the 1950s, I presume that the incidence of food-borne illnesses had fallen quite a bit.

What's the picture like since then? Unfortunately the CDC's data only go back to the late '90s. Several diseases are down, while Vibrio is way up, but the main story is Salmonella -- by far the leading food-borne illness. It shows no change one way or the other since the late '90s. Eurosurveillance, however, has some data on England and Wales going back to the late '70s, and ending in the late '90s. Aside from Shigella, they all show overall increases, including the two leading offenders, Salmonella and Campylobacter.

My hunch is that the causes today are what they were in the days of The Jungle -- crappy sanitary standards among food producers who cater to the bottom chunk (or those at the top too, for all I know). That's pretty easy to get away with because most people who get sick won't report it, and won't know which of the variety of things they ate made them sick. Only if you let it really get out of hand will people complain, the FDA or USDA figure out it was you, and your product get recalled.

These three examples span a range of causes of disease -- bacterial crowd disease, macroparasitic blood-sucker, and bacterial food-borne diseases. Yet they all show pretty similar dynamics, matching up with inequality and bearing little resemblance to the state of the art in medicine. They are all symptoms of the deterioration of living standards as basic government oversight falls by the wayside for such areas (again, primarily the bottom chunk is no longer being looked out for). They also reflect the divisiveness that accompanies over-production of elites and intra-elite competition. No time to look out for the little guy -- let him fend for himself.

For our neo-Dickensian society, the simplest policy improvement is to shut off immigration, including legal immigration. Why not? They carried that out in the '20s and enjoyed a half-century of falling inequality and narrowing of political polarization. Of course other changes in the popular and elite mindset took place, but we ought to begin with the policy that is the simplest to think up and to implement.

Conservatives (or the handful of populist liberals who are still hanging around) should not get side-tracked by legal vs. illegal immigration. Both increase the supply of labor, and thereby decrease its price, i.e. wages. And it'll be asymmetric -- loads more competitors for bottom-chunk jobs, and few or none for top 0.00001% jobs.

Ditto for worsening the public health burden -- whether they came here legally or not, if they stay put, they're yet another potential spreader of whooping cough, another harborer of bed bugs, another food maker with shoddy standards. And they come from places with higher rates of those plagues than we already have to deal with here. Unlike their effect on wages, though, immigrants could very well infect the elites with their diseases, however indirectly, and albeit to a lesser extent than they would infect native bottom-chunk folks who live on the same block.

Final thought: I wonder if the 1918 Spanish Flu pandemic has to do with the turbo-charged levels of globalization and open borders that reached their peak around that time. After the borders were slammed shut in the '20s, you didn't see that level of pandemic in the Western world during the Great Compression. There was that flu "pandemic" in 2009, but that will probably go down as just the first in an escalating series of major outbreaks. We aren't right at the peak for widening inequality yet, so our return of the Spanish Flu hasn't materialized just yet. The future promises to be an interesting time.

Categories: Architecture, Economics, Food, Generations, Health, Human Biodiversity, Morality, Politics, Technology

November 9, 2013

Hazing: Elite in-fighting, not solidarity building

With the topic of locker room bullying in the headlines, former tight end Cam Cleeland reveals the details of a hazing incident from 1998 when he joined the New Orleans Saints. It started out as a "running the gauntlet" initiation with a pillowcase over his head, but then ended when his teammate Andre Royal walloped him in the face with a sock full of coins, shattering his eye socket and nearly costing him his vision in that eye.

Sounds more like the beginning of a revenge killing than of closer team solidarity.

Yet many commentators, whether they're sports fans or not, feel compelled to defend hazing, pointing to the greater solidarity it builds among those who pass through the initiation rites. A quick reality check points to the opposite conclusion -- that in this sports culture of free agents and widespread shameless showboating, hazing cannot be a solidarity-building rite, but must be another example of "let the Devil take the hindmost" morality.

Normal initiation rites are highly circumscribed in what they do and do not allow to happen. The participants must adhere so rigidly to the formulas that we speak of thoughtless and mechanical processes as "ritualistic." That doesn't mean that the initiates won't suffer pain -- often they do. However, what they'll suffer is predictable from the ritual tradition. Any one of the pain-givers who wanted to escalate would be seen as violating a sacred tradition. This keeps sociopaths in check, and the predictability provides a certain amount of trust on the part of the initiates.

The fixed formulas also ensure that what is happening to the initiates has already been endured by the tormentors themselves, back when they were the grunts. "I had to go through it -- now it's your turn." Fair is fair.

Hazing has neither of these features because it is not a rigidly defined and adhered-to set of formulas. What is being suffered by today's grunts was not necessarily suffered by their tormentors back in their time. As a new teammate, Andre Royal didn't have his eye socket smashed in with a sock full of coins. That's something he inflicted on another player, like a coward, without ever having endured it himself.

And hazing is more free-form: those who deviate from the tradition by escalating will not be shamed for violating a tradition that is more sacred and important than their individual fleeting whim. If anything, it sets up a contest among the senior members to see who can push the envelope the most in dealing out pain to the initiates. Which one of us seniors can out-lash the others? And which one of us can come up with the most creative individual performance in lashing the grunts?

Hence, not only does it not build solidarity among the newer group and the established group, it opens up an individualistic status contest even within the senior pain-givers.

A larger reality check over all of American history shows the pattern more tellingly: hazing became more common and more talked-about during periods of widening societal divisiveness, and declined when such divisiveness began to narrow. Here is an excellent overview article by Peter Turchin on the dynamics of inequality in America since its founding. He views the over-production of elites and their consequent intra-elite competition as the driver behind economic inequality and political polarization.

After a quiet period most associated with the Era of Good Feelings circa 1820, inequality began to grow during the mid-19th C, became distressingly wide by the Gilded Age, and only peaked around 1920. From then until the mid-1970s, inequality fell dramatically. And since then, it's been taking off like a rocket again.

Here is a graph showing how common the phrase "hazing" has been in the books in Google's digital library (Ngram):

It lines right up with the inequality dynamics, which are closely tied to intra-elite competition.

Next, see this historical list of hazing-related deaths compiled by people who research these things. Who knows how complete it is, but at least they were ignorant of the historical ideas being put forth here. They too seem to rise and fall in waves. The first one begins in the mid-19th C and peaks in the mid-1910s to early '20s.

There are already proportionally fewer by the second half of the '20s, and none at all listed for the '30s, with only 3 in the '40s. There's a handful in the '50s and only 2 in the '60s. They also tend to be indirect effects of hazing rather than cruelty or humiliation. Most of the small number from the '50s involve getting dropped off far from campus and then getting hit by a car while walking back on the side of the road.

By the mid-'70s, that lull is over, and we enter the wave of hazing that our culture is still in.

Wikipedia has a similar list of milestones in hazing history. Note that during the Gilded Age (1873), the New York Times ran an article titled, "West Point.; "Hazing" at the Academy – An Evil That Should be Entirely Rooted Out." They didn't run an article like that during WWII, which by all of these measures was near a low-point in hazing. We think of that period as being near the height for self-sacrifice and camaraderie in the military, suggesting again that hazing is corrosive to solidarity.

During times of over-production of elites, the established ones fight nastier to hold onto their positions against the aspirants. That is the simplest way to interpret hazing in the broader context -- the entrenched elite struggling to keep out so many would-be usurpers. They shamelessly co-opt the language of preserving tradition and promoting solidarity, while fooling around with the ritual as it pleases them, sowing the seeds of resentment within the group, hiring themselves out like a mercenary rather than a committed lifelong team member, and putting on the most theatrically self-aggrandizing displays for ordinary achievements in their line of work.

Touchdoooown! BOO-ya! In your FACE! SUCK it! Who's the best? Uh-huh, that's me! Uh-huh, that's me! Dance to the right, to the left! To the right, to the left! But not you, you didn't score! Not you, you didn't score! WHEW!!!

Is it any wonder that normal people with healthy minds have become disgusted with the big-time sports culture over the past couple decades? About as uplifting and motivating to watch as a pitbull free-for-all in the black ghetto, or a cockfight in some brown slum.

Sounds more like the beginning of a revenge killing than of closer team solidarity.

Yet many commentators, whether they're sports fans or not, feel compelled to defend hazing, pointing to the greater solidarity it builds among those who pass through the initiation rites. A quick reality check points to the opposite conclusion -- that in this sports culture of free agents and widespread shameless showboating, hazing cannot be a solidarity-building rite, but must be another example of "let the Devil take the hindmost" morality.

Normal initiation rites are highly circumscribed in what they do and do not allow to happen. The participants must adhere so rigidly to the formulas that we speak of thoughtless and mechanical processes as "ritualistic." That doesn't mean that the initiates won't suffer pain -- often they do. However, what they'll suffer is predictable from the ritual tradition. Any one of the pain-givers who wanted to escalate would be seen as violating a sacred tradition. This keeps sociopaths in check, and the predictability provides a certain amount of trust on the part of the initiates.

The fixed formulas also ensure that what is happening to the initiates has already been endured by the tormentors themselves, back when they were the grunts. "I had to go through it -- now it's your turn." Fair is fair.

Hazing has neither of these features because it is not a rigidly defined, faithfully followed set of formulas. What today's grunts suffer was not necessarily suffered by their tormentors back in their day. As a new teammate, Andre Royal didn't have his eye socket smashed in with a sock full of coins. That's something he cravenly inflicted on someone else without ever having endured it himself.

And hazing is more free-form: those who deviate from the tradition by escalating will not be shamed for violating a tradition that is more sacred and important than their individual fleeting whim. If anything, it sets up a contest among the senior members to see who can push the envelope the most in dealing out pain to the initiates. Which one of us seniors can out-lash the others? And which one of us can come up with the most creative individual performance in lashing the grunts?

Hence, not only does it fail to build solidarity between the newer group and the established group, it opens up an individualistic status contest even among the senior pain-givers.

A larger reality check over all of American history shows the pattern more tellingly: hazing became more common and more talked-about during periods of widening societal divisiveness, and declined when such divisiveness began to narrow. Here is an excellent overview article by Peter Turchin on the dynamics of inequality in America since its founding. He views the over-production of elites and their consequent intra-elite competition as the driver behind economic inequality and political polarization.

After a quiet period most associated with the Era of Good Feelings circa 1820, inequality began to grow during the mid-19th C, became distressingly wide by the Gilded Age, and did not peak until around 1920. From then until the mid-1970s, inequality fell dramatically. And since then, it's been taking off like a rocket again.

Here is a graph showing how common the phrase "hazing" has been in the books in Google's digital library (Ngram):

It lines right up with the inequality dynamics, which are closely tied to intra-elite competition.

Next, see this historical list of hazing-related deaths compiled by people who research these things. Who knows how complete it is, but at least its compilers were unaware of the historical ideas being put forth here, so the list wasn't built to fit them. The deaths, too, seem to rise and fall in waves. The first wave begins in the mid-19th C and peaks from the mid-1910s to the early '20s.

There are already proportionally fewer by the second half of the '20s, none at all listed for the '30s, and only 3 in the '40s. There are a handful in the '50s and only 2 in the '60s. These also tend to be indirect effects of hazing rather than the result of cruelty or humiliation. Most of the small number from the '50s involve someone getting dropped off far from campus and then getting hit by a car while walking back along the side of the road.

By the mid-'70s, that lull is over, and we enter the wave of hazing that our culture is still in.

Wikipedia has a similar list of milestones in hazing history. Note that during the Gilded Age (1873), the New York Times ran an article titled "West Point.; 'Hazing' at the Academy – An Evil That Should be Entirely Rooted Out." It ran no article like that during WWII, which by all of these measures was near a low point in hazing. We think of that period as near the height of self-sacrifice and camaraderie in the military, which again suggests that hazing is corrosive to solidarity.

During times of elite over-production, the established elites fight dirtier to hold onto their positions against the aspirants. That is the simplest way to interpret hazing in the broader context -- the entrenched elite struggling to keep out so many would-be usurpers. They shamelessly co-opt the language of preserving tradition and promoting solidarity, while tinkering with the ritual however it pleases them, sowing the seeds of resentment within the group, hiring themselves out like mercenaries rather than committed lifelong team members, and putting on the most theatrically self-aggrandizing displays for ordinary achievements in their line of work.

Touchdoooown! BOO-ya! In your FACE! SUCK it! Who's the best? Uh-huh, that's me! Uh-huh, that's me! Dance to the right, to the left! To the right, to the left! But not you, you didn't score! Not you, you didn't score! WHEW!!!

Is it any wonder that normal people with healthy minds have become disgusted with the big-time sports culture over the past couple decades? About as uplifting and motivating to watch as a pitbull free-for-all in the black ghetto, or a cockfight in some brown slum.

Categories: Crime, Economics, Health, Morality, Politics, Pop culture, Psychology, Sports, Violence

November 8, 2013

Irony, and irony-squared: Or, transparent vs. opaque irony

The corrosion of sincerity since the Nineties has seen not only a quantitative change -- with irony becoming steadily more pervasive -- but also a qualitative shift from what I'll call the transparent irony of the '90s and early 2000s to the opaque irony of the 21st century.

Both forms involve a mapping from inner feelings to outward expressions that is disingenuous. With transparent irony, an observer can still work their way back from expression to feeling, whereas opaque irony leaves the observer puzzled and confused about what feeling they should infer from a given expression.

Beginning in the early-to-mid '90s, ironic behavior primarily took the form of layering a veneer of distaste, ridicule, etc., over top of a feeling of enjoyment or approval. If you liked the guitar solo from "Sweet Child O' Mine," you had to re-enact it with the most caricatured air guitar solo of all time -- every time it played. Otherwise you were just plain old enjoying it, and that was suspicious -- you don't want the Expression Police to knock on the door, do you? Then do the ridiculous air guitar thing, and it'll provide a fig leaf of plausible deniability if they show up on your front porch. "Nah man, I was just like, *making fun of* those cheesy '80s guitar solos. You don't think I'd actually dig something so ridiculous... so uh, am I cleared to go back inside now?"

That was also the time when we started to cultivate an anti-style, wearing things that were so far from our inner, core identity that it was like going out in blackface. Rebellious teenagers wearing musty-looking cardigans -- wacky! But not confusing, no more so than a well-heeled white performing in blackface during the Jazz Age. I had an anti-authoritarian streak in high school, so in the first election season in which I could vote, 1998, I found some old Nixon/Agnew pins to wear on my field jacket.

So, we made fun of the stuff we liked, and appeared to endorse things we wanted nothing to do with. Once you knew that shift in mindset, though, you could then figure out how we felt from what we expressed -- just infer the opposite of what you normally would. Why did we make observers go through this mental rotation task every time they wanted to figure out what we really meant? Again, it provided plausible deniability when the Expression Police came to our door.

In times when normal behavior became criminalized and warped behavior was elevated, being a normal rebellious young person was suddenly like being a dissident in an authoritarian state. Plaster the outside of your business with posters in support of the stodgy old leader, and put up dissident posters inside -- ones that have been suitably, yet not blasphemously, defaced. That was a fine line to walk back then: how to poke enough fun to evade detection as a true supporter, while not going so far as to defile or disrespect your idols.

I associate this transparent irony with Family Guy and the early Simpsons on TV, and grunge and alternative music, both how they sounded on the radio / album, and how their videos looked on MTV. Family Guy and bands like Weezer show how this form might lead toward the sentimental -- when you have an official cover of not liking something, you might give yourself free rein to (inwardly) indulge in it way more than if you were just being sincere.

Irony-squared, or opaque irony, is a different beast altogether. Any given expression of enjoyment is as likely to have come from a positive as a negative feeling. And any expression of ridicule could just as well come from truly enjoying it as it could from truly hating it.

Does that selfie of you with a kabuki "angry" expression mean you're truly angry or just goofing around? Who can tell -- you make that face equally often in angering and in uplifting circumstances. Expressions are indiscriminate. Ditto for the kabuki "elated surprise" face -- you make that when you just found out you got a pay raise, as well as when you're at your own mother's funeral.

I picked up the same vibe from Millennials who got into the whole '80s revival in the mid-to-late 2000s. Were they wearing those clothes from American Apparel because they really liked the style, or was it another case of blackface ("check it out, cheesy '80s fashion")? It was as likely to be one as the other.

Emotional expression in indie music is indiscriminately neutral and "bleh," and it is indiscriminately angsty in emo. Most singer-songwriter stuff takes the form of indiscriminate attention-seeking through constant signals of needing to be rescued -- the musical equivalent of "the boy who cried wolf."

This impossible-to-pin-down character is not just a switcharoo to fool the authorities. In the '90s, we were all in on the joke -- whether we thought the put-on was subversively cool or just plain annoying, we could still decode the underlying feeling from the expression. Young people these days can't figure out how the hell anybody else is feeling, what they actually like, what they truly despise, and what they really mean. Plausible deniability is no longer a smoke-screen for when the Expression Police roll up, but a pervasive fog that atomizes the entirety of the younger generation (and anybody attempting to interact with them).

You see this especially clearly in their awkward "social" lives. They prefer gathering together in a hive, like a computer cluster at the campus library, or any of the other public spaces that have been converted to that purpose, like coffee shops. They'll glance at or make eye contact with people they're interested in, but will then look randomly around the room too -- as though to suggest that nothing in particular steered their eyes to the person they made eye contact with. Then they stay hunkered down over their glowing screens, whether there's someone there they're interested in or not. It's indiscriminate looking-around or indiscriminate shutting-the-blinds.

Same thing on Facebook and texting, where they prefer even more to "interact." Does some boy like some girl? Well, maybe Boy will work up the courage to leave an interested yet non-committal comment on Girl's wall (or whatever that retarded thing is called now). But wait, will Girl interpret that as an honest signal of Boy's interest? Uh... uh... shit, I know, let's leave a cover-your-ass comment, too, just in case. "....hashtag unintentionally awkward Facebook comments." Great! Now she'll never know how I really feel about her! Dodged a bullet there...

And it's not just pussified boys who act that way. If random Millennial boys came up to talk to a Millennial girl at Starbucks, she'd get creeped out. Whether they were subtle or obvious wouldn't matter, and would only determine how quickly she'd scurry away. If they went on Facebook / texted her, openly telling her how they felt about her, she'd get creeped out if it were "attracted," and outraged if it were "concerned and critical." Better just shut up about how you really feel, no matter what that may be.

The inscrutable nature of Millennial signal-sending leads to profound confusion not only about what others feel but also about one's own worthiness. If you can't tell where your peers truly rank you for attractiveness, trustworthiness, fun-loving-ness, etc., then what are you to do? You can try to make up the answer yourself -- "obviously I'm in the top 1%, just like everybody else" -- but your brain isn't that stupid. It knows that the answers must come from peers, not family or ego, to be unbiased, and particularly from a wide range of peers, rather than only those who have a motive to kiss your butt, or only those who want to tear you down.

With no signals coming in that can be at least somewhat pinned down, the data are simply not entered at all. It's as though all the surveys about you came back filled entirely with "Chose Not To Answer." This is worse than having a wide margin of error -- that at least provides an estimate somewhere in the middle. This is like all blank answers, or maybe "illegible" answers is more apt.

This is at the root of all sorts of dysmorphias among young people today. Not just body dysmorphia from not knowing how attractive your peers find you. Any quality of yours is subject to self-doubt and unending anxiety about what all those missing answers would have contained if people would just say what they honestly felt.

Kids these days are hesitant to approach and reluctant to respond, and it's a positive feedback loop. As the signal-sender, they give indiscriminate signals, which tells the other side not to get involved with them -- no point if you can't tell what they mean. Then, even if someone else gave them a clear signal for a change, as the signal-receiver they wouldn't know how to respond. With greater experience, you develop a knack for how to respond in situation A, B, C, and so on. And you judge how appropriate your response was by how the other party responded in turn. But since you've never gotten feedback on your feedback, you don't know how to react even when the situation is clearly A. So you just pick a response at random -- another indiscriminate choice, when you have no clue what to do.

That clumsiness frustrates the other side -- "I open up for once, and this is what happens. I'm such an idiot for bothering in the first place. Guard up forever." Interpersonal awkwardness will never go away so long as the drive toward opaque irony remains in place.

Millennials do occasionally get together as friends or boyfriend-girlfriend. But none of the "courtship" can refer to feelings. It's all about how "we would make sense together." (Feel the passion sizzling off of the page.) It's just a fact that your qualities and my qualities would produce a mutually beneficial arrangement. I think some of them are hoping that after the initial agreement has been made, they can shed the contractual / for-hire way of interacting with each other and talking about their relationship. But once you've chosen that mindset, it's hard to choose another. Cognitive dissonance, fear of the unfamiliar, general awkwardness, etc.

So they stay in relationships as long as they agree to renew their contract, or as long as they can convince one another that they still "make sense" as a couple. They do lapse into the occasional expression of genuine affection, and given how rare it is, they'll likely remember that for the rest of the relationship. On the whole, though, the contractual frame of mind makes for a spirit-crushing atmosphere, and they are easily estranged from each other.

It should be clear that I trace both forms of irony back to the cocooning trend of the past 20 or so years, and that openness and sincerity came back starting in the late '50s or so and peaked in the '80s. What about before then? I pick up the same vibes from the mid-century as from today. The hipsters today are incredibly marginal figures, and an observer from 60 years in the future might have trouble tracking them down. But everybody knows about the Beatniks from the mid-century. Was their malaise just a put-on (transparent), or were they indiscriminately disaffected (opaque)? Beats me, seems like both, though.

And then there was all that cracking wise -- was the wise-cracking a put-on, or did they crack wise no matter whether they enjoyed or loathed what they were commenting on? Was the wise-cracking dame truly interested in you, or was she as likely to crack wise if she hated your guts too? Ditto all the wise-cracking from the fellas. In that environment, better to just not show much feeling at all, and hunker down in your own little world (The Man in the Gray Flannel Suit, The Feminine Mystique). Mid-century Americans have an inscrutable quality, where they're either indiscriminately chipper (campy ads where the whole family is wearing a big gay smile), or indiscriminately morose (the Existentialist, Hopper, Age of Anxiety, Beatnik crowd).

Inequality and competitiveness were falling and near a low point, so at least they didn't make status contests out of ironic displays like we do. Or at least not to the same extent -- they still had all those layers upon layers of wise-cracking between men and women in both film noir and screwball comedies, to see who would gain the upper hand. Just give it a rest already, you buncha fast-talkin' broads...

* * *

I considered including a mathematical analogy in the main post, but then I figured it wouldn't help most people and might even drive them away. If that's you, you can split now.

For those who remember functions from algebra class, we can think of sincerity, irony, and irony-squared as three simple types of functions that map feeling (on the x axis) into expression (on the y).

Sincerity is something like f(x) = x, an increasing function through the origin. The only important thing is that positive feelings lead to positive expressions, negative feelings to negative expressions. It passes both the horizontal and vertical "line tests," so it is invertible -- from a given expression, you can tell what feeling produced it.

Transparent irony is like f(x) = -x, a decreasing function through the origin, and orthogonal to the sincerity function. We can rotate the axes 90 degrees and obtain the sincerity function, and then we're back to the first case. It's as though people rotated the axes, then applied the sincerity function. OK, we'll just rotate the axes back, and then invert the sincerity function.

So we can still recover the feeling that produced a given expression. It just requires an extra step that makes it annoying for most people to bother with, especially strangers who don't really care much about how we really feel. The orthogonality of these first two functions gives the second one a shock value, but one that wears off quickly as we learn to apply a simple axis rotation first.
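For anyone who wants the axis rotation spelled out, here is a short worked version. It assumes a counterclockwise 90-degree rotation of the plane; a clockwise one works equally well, since it also carries the line y = -x onto y = x.

```latex
% Rotating the plane 90 degrees counterclockwise sends (x, y) to (-y, x):
R(x,\, y) = (-y,\; x)
% A point on the transparent-irony graph y = -x has the form (t, -t):
R(t,\, -t) = \bigl(-(-t),\; t\bigr) = (t,\; t)
% which lies on the sincerity graph y = x. Equivalently, inverting f(x) = -x directly:
y = -x \iff x = -y
```

In words: the feeling is just the negative of the expression, which is the one extra step an observer has to learn.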

Opaque irony is like f(x) = x^2 or f(x) = |x| (or their negatives, -x^2 and -|x|): concave up (or down), with a minimum (or maximum) on the y axis, and symmetric about it. This passes the vertical line test -- every feeling leads to a definite expression. But it fails the horizontal line test, so it is not a one-to-one correspondence that would let us invert it. For any expression other than the one at the very bottom (or top) of the curve, there are two possible feelings that could have produced it -- and they're opposite in sign and equal in magnitude.

Axis rotation is no help this time: rotating 90 degrees would yield something that wasn't even a function, a relation that took a given feeling and was equally likely to express it in a positive as a negative way. We are stuck with expressions only, uncertain whether a positive or a negative feeling produced them.
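The line tests can also be checked mechanically. Below is a minimal Python sketch, assuming a hypothetical discrete feeling scale from -5 to +5. The function names (`sincerity`, `transparent_irony`, `opaque_irony`, `feelings_behind`, `is_invertible`) are illustrative only. The sketch searches the scale for every feeling that produces a given expression, and it applies the horizontal line test by checking whether any two feelings share an expression.

```python
# Hypothetical discrete "feeling" scale: -5 (strong dislike) to +5 (strong liking).
FEELINGS = range(-5, 6)


def sincerity(x: int) -> int:
    """f(x) = x: the expression matches the feeling."""
    return x


def transparent_irony(x: int) -> int:
    """f(x) = -x: the expression is the opposite of the feeling."""
    return -x


def opaque_irony(x: int) -> int:
    """f(x) = x^2: the expression throws away the sign of the feeling."""
    return x * x


def feelings_behind(f, expression: int) -> list[int]:
    """Return every feeling on the scale that f maps to this expression (its preimage)."""
    return [x for x in FEELINGS if f(x) == expression]


def is_invertible(f) -> bool:
    """Horizontal line test on the finite scale: no two feelings share an expression."""
    expressions = [f(x) for x in FEELINGS]
    return len(set(expressions)) == len(expressions)


if __name__ == "__main__":
    for name, f in [("sincerity", sincerity),
                    ("transparent irony", transparent_irony),
                    ("opaque irony", opaque_irony),
                    ("opaque irony, |x| version", abs)]:
        # Express a feeling of +3, then ask which feelings could have produced that expression.
        print(name, is_invertible(f), feelings_behind(f, f(3)))
```

Sincerity and transparent irony each trace the expression back to the single feeling +3, while both opaque versions return [-3, 3]. The vertex is the one exception: `feelings_behind(opaque_irony, 0)` finds only `[0]`.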

This isn't just a nerdy exercise, an analogy for its own sake. I think each of these formal properties of the model reflects something real about how the mind works. Take the mental rotation of the axes that transforms the transparent irony function into the more familiar sincerity one. That rotation would also swap which axis was feeling and which was expression -- part of that whole post-modernist playing around with / questioning of / inversion of surface and substance that was a big thing at the time, at least among those who were also pushing the (transparent) irony thing.

Both forms involve a mapping from inner feelings to outward expressions that is disingenuous. With transparent irony, an observer can still work their way back from expression to feeling, whereas opaque irony leaves the observer puzzled and confused about what feeling they should infer from a given expression.

Beginning in the early-to-mid '90s, ironic behavior primarily took the form of layering a veneer of distaste, ridicule, etc., over top of a feeling of enjoyment or approval. If you liked the guitar solo from "Sweet Child O' Mine," you had to re-enact it with the most caricatured air guitar solo of all time -- every time it played. Otherwise you were just plain old enjoying it, and that was suspicious -- you don't want the Expression Police to knock on the door, do you? Then do the ridiculous air guitar thing, and it'll provide a fig leaf of plausible deniability if they show up on your front porch. "Nah man, I was just like, *making fun of* those cheesy '80s guitar solos. You don't think I'd actually dig something so ridiculous... so uh, am I cleared to go back inside now?"

That was also the time when we started to cultivate an anti-style, wearing things that were so far from our inner, core identity that it was like going out in blackface. Rebellious teenagers wearing musty-looking cardigans -- wacky! But, not confusing, no more so than a well-heeled white performing in blackface during the Jazz Age. I had an anti-authoritarian streak in high school, so the first election season that I could vote, 1998, I found some old Nixon/Agnew pins to wear on my field jacket.

So, we made fun of the stuff we liked, and appeared to endorse things we wanted nothing to do with. Once you knew that shift in mindset, though, you could then figure out how we felt from what we expressed -- just infer the opposite of what you normally would. Why did we make observers go through this mental rotation task every time they wanted to figure out what we really meant? Again, it provided plausible deniability when the Expression Police came to our door.

In times when normal behavior becomes criminalized, and warped behavior elevated, being a normal rebellious young person was suddenly like being a dissident in an authoritarian state. Plaster the outside of your business with posters in support of the stodgy old leader, and put up dissident posters inside -- that have been suitably yet not blasphemously defaced. That was a fine line to walk back then -- how to poke enough fun in order to evade detection as a true supporter, while not going so far as to defile or disrespect your idols.

I associate this transparent irony with Family Guy and the early Simpsons on TV, and grunge and alternative music, both how they sounded on the radio / album, and how their videos looked on MTV. Family Guy and bands like Weezer show how this form might lead toward the sentimental -- when you have an official cover of not liking something, you might give yourself free rein to (inwardly) indulge in it way more than if you were just being sincere.

Irony-squared, or opaque irony, is a different beast altogether. Any given expression of enjoyment is as likely to have come from a positive as a negative feeling. And any expression of ridicule could just as well come from truly enjoying it as it could from truly hating it.

Does that selfie of you with a kabuki "angry" expression mean you're truly angry or just goofing around? Who can tell -- you make that face equally often in angering and in uplifting circumstances. Expressions are indiscriminate. Ditto for the kabuki "elated surprise" face -- you make that when you just found out you got a pay raise, as well as when you're at your own mother's funeral.

I picked up the same vibe from Millennials who got into the whole '80s revival in the mid-to-late 2000s. Were they wearing those clothes from American Apparel because they really liked the style, or was it another case of blackface ("check it out, cheesy '80s fashion")? It was as likely to be one as the other.

Emotional expression in indie music is indiscriminately neutral and "bleh," and it is indiscriminately angsty in emo. Most singer-songwriter stuff takes the form of indiscriminate attention-seeking through constant signals of needing to be rescued -- the musical equivalent of "the boy who cried wolf."

This impossible-to-pin-down character is not just a switcharoo to fool the authorities. In the '90s, we were all in on the joke -- whether we thought the put-on was subversively cool or just plain annoying, we could still decode the underlying feeling from the expression. Young people these days can't figure out how the hell anybody else is feeling, what they actually like, what they truly despise, and what they really mean. Plausible deniability is no longer a smoke-screen for when the Expression Police roll up, but a pervasive fog that atomizes the entirety of the younger generation (and anybody attempting to interact with them).

You see this especially clearly in their awkward "social" lives. They prefer gathering together in a hive, like a computer cluster at the campus library, or any of the other public spaces that have been converted to that purpose, like coffee shops. They'll make glance at / make eye contact with people they're interested in, but will then look randomly around the room too -- as though to suggest that nothing in particular steered their eyes to the person they made eye contact with. Then they stay hunkered down over their glowing screens, whether there's someone there they're interested in or not. It's indiscriminate looking-around or indiscriminate shutting-the-blinds.

Same thing on Facebook and texting, where they prefer even more to "interact." Does some boy like some girl? Well, maybe Boy will work up the courage to leave an interested yet non-committal comment on Girl's wall (or whatever that retarded thing is called now). But wait, will Girl interpret that as an honest signal of Boy's interest? Uh... uh... shit, I know, let's leave a cover-your-ass comment, too, just in case. "....hashtag unintentionally awkward Facebook comments." Great! Now she'll never know how I really feel about her! Dodged a bullet there...

And it's not just pussified boys who act that way. If random Millennial boys came up to talk to a Millennial girl at Starbucks, she'd get creeped out. Whether they were subtle or obvious wouldn't matter, and would only determine how quickly she'd scurry away. If they went on Facebook / texted her, openly telling her how they felt about her, she'd get creeped out if it were "attracted," and outraged if it were "concerned and critical." Better just shut up about how you really feel, no matter what that may be.

The inscrutable nature of Millennial signal-sending leads to profound confusion not only of what others feel, but about one's own worthiness. If you can't tell where your peers truly rank you for attractiveness, trustworthiness, fun-loving-ness, etc., then what are you to do? You can try to make up the answer yourself -- "obviously I'm in the top 1%, just like everybody else" -- but your brain isn't that stupid. It knows that the answers must come from peers, not family or ego, in order to not be biased, and particularly from a wide range of peers, rather than only those who have a motive to kiss your butt, or only those who want to tear you down.

With no signals coming in that can be at least somewhat pinned down, the data are simply not entered at all. It's as though all the surveys about you came back filled entirely with "Chose Not To Answer." This is worse than having a wide margin-of-error -- that at least provides an estimate in the center somewhere. This is like all blank answers, or maybe "illegible" answers is more apt.

This is at the root of all sorts of dysmorphias among young people today. Not just body dysmorphia from not knowing how attractive your peers find you. Any quality of yours is subject to self-doubt and unending anxiety about what all those missing answers would have contained if people would just say what they honestly felt.

Kids these days are hesitant to approach and reluctant to respond, and it's a positive feedback loop. As the signal-sender, they give indiscriminate signals, which tells the other side not to get involved with them -- no point if you can't tell what they mean. Then, even if someone else gave them a clear signal for a change, as the signal-receiver they wouldn't know how to respond. With greater experience, you develop a knack for how to respond in situations A, B, C, and so on. And you judge how appropriate your response was by how the other party responded in turn. But since you've never gotten feedback on your feedback, you don't know how to react even when the situation is clearly A. So you just pick a response at random -- another indiscriminate choice, when you have no clue what to do.

That clumsiness frustrates the other side -- "I open up for once, and this is what happens. I'm such an idiot for bothering in the first place. Guard up forever." Interpersonal awkwardness will never go away so long as the drive toward opaque irony remains in place.

Millennials do occasionally get together as friends or boyfriend-girlfriend. But none of the "courtship" can refer to feelings. It's all about how "we would make sense together." (Feel the passion sizzling off of the page.) It's just a fact that your qualities and my qualities would produce a mutually beneficial arrangement. I think some of them are hoping that after the initial agreement has been made, they can shed the contractual / for-hire way of interacting with each other and talking about their relationship. But once you've chosen that mindset, it's hard to choose another. Cognitive dissonance, fear of the unfamiliar, general awkwardness, etc.

So they stay in relationships as long as they agree to renew their contract, or as long as they can convince one another that they still "make sense" as a couple. They do lapse into the occasional expression of genuine affection, and given how rare it is, they'll likely remember that for the rest of the relationship. On the whole, though, the contractual frame of mind makes for a spirit-crushing atmosphere, and they are easily estranged from each other.

It should be clear that I trace both forms of irony back to the cocooning trend of the past 20 or so years, and that openness and sincerity came back starting in the late '50s or so and peaked in the '80s. What about before then? I pick up the same vibes from the mid-century as from today. The hipsters today are incredibly marginal figures, and an observer from 60 years in the future might have trouble tracking them down. But everybody knows about the Beatniks from the mid-century. Was their malaise just a put-on (transparent), or were they indiscriminately disaffected (opaque)? Beats me, seems like both, though.

And then there was all that cracking wise -- was the wise-cracking a put-on, or did they crack wise no matter whether they enjoyed or loathed what they were commenting on? Was the wise-cracking dame truly interested in you, or was she as likely to crack wise if she hated your guts too? Ditto all the wise-cracking from the fellas. In that environment, better to just not show much feeling at all, and hunker down in your own little world (The Man in the Gray Flannel Suit, The Feminine Mystique). Mid-century Americans have an inscrutable quality, where they're either indiscriminately chipper (campy ads where the whole family is wearing a big gay smile), or indiscriminately morose (the Existentialist, Hopper, Age of Anxiety, Beatnik crowd).

Inequality and competitiveness were falling and near a low point, so at least they didn't make status contests out of ironic displays like we do. Or at least not to the same extent -- they still had all those layers upon layers of wise-cracking between men and women in both film noir and in screwball comedies, to see who would gain the upper hand. Just give it a rest already, you buncha fast-talkin' broads...

* * *

I considered including a helpful mathematical analogy in the main post, but then I figured it wouldn't help most people and would only drive them away. If that's you, you can split now.

For those who remember functions from algebra class, we can think of sincerity, irony, and irony-squared as three simple types of functions that map feeling (on the x axis) into expression (on the y).

Sincerity is something like f(x) = x, an increasing function through the origin. The only important thing is that positive feelings lead to positive expressions, negative feelings to negative expressions. It passes both the horizontal and vertical "line tests," so it is invertible -- from a given expression, you can tell what feeling produced it.
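To make the "line tests" concrete, here is a minimal Python sketch. It assumes feelings and expressions are plain real numbers sampled on a small grid; the grid and the helper names are illustrative only, not anything from the post. It checks that sincerity never maps two different feelings to the same expression, which is exactly what lets us invert it.

```python
def sincerity(feeling: float) -> float:
    """f(x) = x: the expression simply equals the feeling."""
    return feeling

def passes_horizontal_line_test(f, feelings) -> bool:
    """True if no two distinct feelings on the grid produce the same expression."""
    expressions = [f(x) for x in feelings]
    return len(set(expressions)) == len(set(feelings))

def invert_sincerity(expression: float) -> float:
    """The inverse of f(x) = x is itself: read the feeling straight off the expression."""
    return expression

# Hypothetical grid of feelings, negative through positive.
feelings = [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0]

print(passes_horizontal_line_test(sincerity, feelings))            # True
print(all(invert_sincerity(sincerity(x)) == x for x in feelings))  # True
```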

Transparent irony is like f(x) = -x, a decreasing function through the origin, and orthogonal to the sincerity function. We can rotate the axes 90 degrees and obtain the sincerity function, and then we're back to the first case. It's as though people rotated the axes, then applied the sincerity function. OK, we'll just rotate the axes back, and then invert the sincerity function.

So we can still recover the feeling that produced a given expression. It just requires an extra step that makes it annoying for most people to bother with, especially strangers who don't really care much about how we really feel. The orthogonality of these first two functions gives the second one a shock value, but one that wears off quickly as we learn to apply a simple axis rotation first.
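The two-step recovery for transparent irony (rotate the axes back, then invert the sincerity function) can be sketched the same way. This is a minimal illustration that assumes a counterclockwise 90-degree rotation about the origin; for a line through the origin, either direction lands on the perpendicular line. The helper names are hypothetical. Each point (x, -x) on the transparent-irony graph rotates to (x, x), a point on the sincerity line.

```python
def transparent_irony(feeling: float) -> float:
    """f(x) = -x: positive feelings come out as negative expressions, and vice versa."""
    return -feeling

def rotate_90_ccw(point):
    """Rotate a point (x, y) by 90 degrees counterclockwise about the origin."""
    x, y = point
    return (-y, x)

def recover_feeling(expression: float) -> float:
    """Undo the irony: f(x) = -x is its own inverse, so just flip the sign back."""
    return -expression

feelings = [-2.0, -1.0, 0.0, 1.0, 2.0]
graph = [(x, transparent_irony(x)) for x in feelings]
rotated = [rotate_90_ccw(p) for p in graph]

# Every rotated point (a, b) has b == a, i.e. it lies on the sincerity line y = x.
print(all(b == a for a, b in rotated))  # True
# And every feeling can be recovered from its ironic expression.
print(all(recover_feeling(transparent_irony(x)) == x for x in feelings))  # True
```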

Opaque irony is like f(x) = x^2 (or -x^2), and f(x) = |x| (or -|x|), concave up (or down) with a minimum (or maximum) on the y axis, and symmetric about it. This passes the vertical line test -- every feeling leads to a certain expression. But it fails the horizontal line test, and so is not a one-to-one correspondence that would let us invert it. For any expression other than the one at the vertex, there are two possible feelings that could have produced it -- and they're opposite in sign and equal in magnitude.

Axis rotation is no help this time: rotating 90 degrees would yield something that wasn't even a function, a relation that took a given feeling and was equally likely to express it in a positive or a negative way. We are stuck with expressions only, uncertain whether a positive or a negative feeling produced them.
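A similar sketch for opaque irony, again assuming a small grid of feelings and hypothetical helper names, shows both failures: two opposite feelings share each nonzero expression, and rotating the graph by 90 degrees produces a relation in which one input is paired with two outputs, so it is no longer a function. The f(x) = x^2 case is used here; |x| behaves the same way.

```python
import math
from collections import defaultdict

def opaque_irony(feeling: float) -> float:
    """f(x) = x**2: the sign of the feeling is thrown away."""
    return feeling ** 2

def possible_feelings(expression: float) -> list:
    """All feelings that could have produced this expression under f(x) = x**2."""
    if expression < 0:
        return []  # nothing maps below the vertex
    root = math.sqrt(expression)
    return [root] if root == 0 else [root, -root]

def rotate_90_ccw(point):
    """Rotate a point (x, y) by 90 degrees counterclockwise about the origin."""
    x, y = point
    return (-y, x)

def is_function(points) -> bool:
    """True if every first coordinate is paired with exactly one second coordinate."""
    outputs = defaultdict(set)
    for a, b in points:
        outputs[a].add(b)
    return all(len(bs) == 1 for bs in outputs.values())

feelings = [-2.0, -1.0, 0.0, 1.0, 2.0]
graph = [(x, opaque_irony(x)) for x in feelings]

print(possible_feelings(4.0))  # [2.0, -2.0]: equal magnitude, opposite sign
print(possible_feelings(0.0))  # [0.0]: only the vertex is unambiguous
print(is_function(graph))      # True: passes the vertical line test
print(is_function([rotate_90_ccw(p) for p in graph]))  # False: rotated graph is a relation
```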

This isn't just a nerdy exercise, an analogy for its own sake. I think each of these formal properties of the model reflects something real about how the mind works. Take the mental rotation of the axes that transforms the transparent irony function into the more familiar sincerity one. That rotation would also swap which one was feeling and which one was expression -- part of that whole post-modernist playing around with / questioning of / inversion of the surface and substance that was a big thing at the time, at least among those who were also pushing the (transparent) irony thing at the same time.

Categories: Cocooning, Design, Dudes and dudettes, Generations, Health, Language, Literature, Music, Pop culture, Psychology, Television

November 5, 2013

Sanitizing, skin allergies, anti-scratch mittens... hand deformities?

My dad posted a picture of me as a newborn on Facebook, and my sister-in-law left a comment about "Back before every child wore mittens to prevent them from scratching their face." I thought I'd heard about or seen all of the ways that helicopter parents have been bubble-wrapping their kids these days, but apparently not.

That was my first thought anyway -- more paranoid, pointless shock absorption. Still, it didn't sound like the rest of the examples, where kids used to get exposed to some shock that made their bodies and minds respond by becoming stronger. Scratching at your face wasn't something that "kids used to do" in the good old days. I asked my parents, and they confirmed that none of us clawed at our own faces when we were little.

Amazon sells a wide variety of no-scratch mittens for babies, such as these. One customer review says that her baby "tends to scratch his face a lot and I never seem to be able to keep his nails short enough." And from the comments section to a post on the topic at babycenter.com: "My kids have all scratched themselves to bleeding at some point! It happens so fast."

Why does such a large fraction of babies today scratch themselves badly enough and frequently enough that there could be a thriving industry selling no-scratch mittens?

My hunch is that the worse itching is part of the rise in skin allergies, and in overly sensitive skin more generally that falls short of the clinical definition of allergy (sensitivity vs. robustness being a continuum). The CDC has been collecting data on childhood allergies since 1997, and skin allergies show the greatest increase, from 7.4% at the start of that series to 12.5% around 2010. Treating skin sensitivity as normally distributed, with allergy defined as falling beyond a fixed cutoff, that translates into a rise in the average of about 0.3 standard deviations -- a huge change for less than 15 years. It's as though the average kid had shrunk in height by 9/10 of an inch during that brief period.
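The 0.3 figure follows from a simple threshold model: assume skin sensitivity is normally distributed with a fixed spread, and that "allergic" means landing beyond a fixed clinical cutoff. The share of kids past the cutoff then pins down how far the cutoff sits from the mean, in standard deviations, and the change in that distance is the shift in the mean. Here is a minimal sketch using Python's standard-library `statistics.NormalDist`. The threshold model is an assumption, and so is the roughly 3-inch standard deviation of children's height implied by the 9/10-inch comparison; the post does not state it.

```python
from statistics import NormalDist

std_normal = NormalDist(mu=0.0, sigma=1.0)

def cutoff_z(share_above: float) -> float:
    """Distance (in SDs) from the mean to a cutoff that the given share of kids exceed."""
    return std_normal.inv_cdf(1.0 - share_above)

# Prevalence of childhood skin allergy, from the CDC figures quoted above.
z_before = cutoff_z(0.074)  # about 1.45 SD above the mean
z_after = cutoff_z(0.125)   # about 1.15 SD above the mean

# With the cutoff held fixed, the mean must have moved toward it by the difference.
shift_in_sd = z_before - z_after
print(round(shift_in_sd, 2))  # 0.3

# Assumption: SD of children's height of roughly 3 inches (not stated in the post).
ASSUMED_HEIGHT_SD_INCHES = 3.0
print(round(shift_in_sd * ASSUMED_HEIGHT_SD_INCHES, 1))  # 0.9
```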

Here is the CDC's report, and here is a US News article with quotes from doctors saying, Yeah, this rise in allergies is real, we see it every day. Such widespread skin allergies do not extend far into the past; the fact that the CDC only started collecting data in 1997 suggests that the epidemic began in the early or mid-1990s.

That was right when society in general, and parents in particular, began to show severe symptoms of hygiene-focused OCD, in a note-for-note revival of the mid-century and Victorian periods. Suddenly antibacterial soap was the norm, hand sanitizer was everywhere, and housebound women took out their disinfectant arsenal and began conducting daily napalm raids on all surfaces in the home (as well as expecting -- and hence receiving -- the same treatment of surfaces in the public spaces that they patronized).

Locking kids indoors all day and requiring shoes outside also kept them from reaping the health benefits of normal human skin contact with grass, dirt, leaves, tree bark, and so on. It's not as though there was an epidemic of hookworm in white suburban front yards during the '90s; it was pure paranoia.

So these no-scratch mittens may be responding to a real, new need, but it is a problem that the helicopter parents created themselves. Of course, setting up yet another barrier between the kid's skin and the environment will only worsen the problem of over-sanitization as they age.

Not to mention deforming their hands in subtle or perhaps gross ways -- no way to know until it's too late. Wearing mittens prevents you from gripping, holding, lifting, manipulating, probing / exploring... well, just about everything your hands and fingers were meant to do, other than striking. Preventing such fundamental activities during the sensitive window of childhood development will likely have the same kind of effect on the hands that forcing kids to wear shoes all throughout childhood has on the feet -- namely, flatfoot. Barefoot children never have flat feet, while shod children do (at high rates if the shoes are closed-toe and ill-fitting).

Getting banged up a little bit is good for your bones. Otherwise your body assumes they don't need to be strong and doesn't invest many resources in maintaining their strength. And sure enough, the data show that children's bones became tougher against accidents from 1970 to 1990, then got weaker in 2000, and presumably worse still by 2010. Kids these days can easily shatter both hands by taking a simple fall -- it's pathetic.

Their parents could have saved the costs of treating broken hands and wrists by just letting their kids be kids. A scrape here and a bruise there go away by themselves, no costs at all to the parents. That is, unless the parents are the type who can't stand the psychological pain of witnessing their kid take a hard fall. Get over it, I don't know what else to say. Stop blubbering like a hysterical woman about protecting them from harm. You took plenty of hard falls yourself when you were growing up, and you're not only still here, you were stronger than your wimpy kids are at the same age.

Prudent parenting says don't change the regime to the total opposite of what you had, if that seemed to work just fine. It's possible, even likely, that an opposite upbringing will have the opposite effect.

Helicopter parenting is therefore an extreme form of the Progressive, blank slate, engineering approach to human development. There is nothing conservative about it whatsoever. It emphasizes harm avoidance over everything else (Haidt), and it presumes to know better than God's plan, Mother Nature, adaptation by natural selection, the wisdom of tradition, or whatever else you want to call it.

In the view from the '80s, a child was a new person with their own internal nature who had to be accommodated within the family and integrated into the wider community (socialization). Their nature would see to altering their personality and behavior as they worked their way through this process. In the new view, he's just a hunk of raw material to be micro-sculpted by the technocratic parents, and who needs to be regularly dusted and sterilized like a dinner table, as though his nature had no way of responding to and building up resistance to foreign debris.